In many AI applications today, performance is great. You may have noticed that when using large language models (LLM), it takes a lot of time to wait – waiting for the API to respond, waiting for multiple phones to complete, or waiting for the I/O operation.

This is where Asyncio enters. Surprisingly, many developers use LLM without realizing that they can speed up their applications with asynchronous programming.

This guide will walk you through:

- What is asynchronous?

- Get started with asynchronous Python

- Using Asyncio in AI applications with LLM

What is asynchronous?

Python’s Asyncio library can write concurrent code using asynchronous/wait syntax, allowing multiple I/O combined tasks to run effectively in a single thread. The core of Asyncio works with the expected object (usually coroutines) that is an event loop planned and executed without blocking.

Simply put, synchronous code runs tasks one after another, such as standing on a single grocery line, while asynchronous code runs tasks simultaneously, such as using multiple self-checking machines. This is especially useful for API calls (e.g. Openai, human, hugging face), which is waiting for a response most of the time, so that it can be executed faster.

Get started with asynchronous Python

Example: Run a task with and without asynchronous use

In this example, we run simple functions three times in a synchronous manner. The output indicates that each call says_hello() prints “Hello…”, waits for 2 seconds, and then prints “…world!”. Since the call occurs one by one, the waiting time adds up – 2 seconds × 3 calls = 6 seconds. Check The complete code is here.

import time

def say_hello():

print("Hello...")

time.sleep(2) # simulate waiting (like an API call)

print("...World!")

def main():

say_hello()

say_hello()

say_hello()

if __name__ == "__main__":

start = time.time()

main()

print(f"Finished in {time.time() - start:.2f} seconds")The following code shows that all three calls to the SAI_HELLO() function are started almost simultaneously. Each prints “Hello…” immediately, then waits for 2 seconds at the same time, and then prints “…World!”.

Because these tasks run in parallel, rather than one after another, the total time is about the longest single wait time (~2 seconds) rather than the sum of all waits (6 seconds in the synchronous version). This demonstrates the performance advantages of Asyncio for I/O combined tasks. Check The complete code is here.

import nest_asyncio, asyncio

nest_asyncio.apply()

import time

async def say_hello():

print("Hello...")

await asyncio.sleep(2) # simulate waiting (like an API call)

print("...World!")

async def main():

# Run tasks concurrently

await asyncio.gather(

say_hello(),

say_hello(),

say_hello()

)

if __name__ == "__main__":

start = time.time()

asyncio.run(main())

print(f"Finished in {time.time() - start:.2f} seconds")Example: Download the simulation

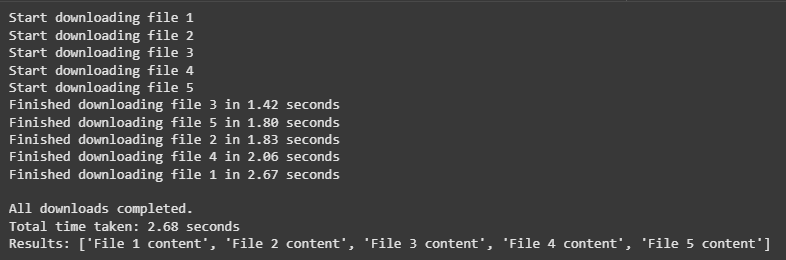

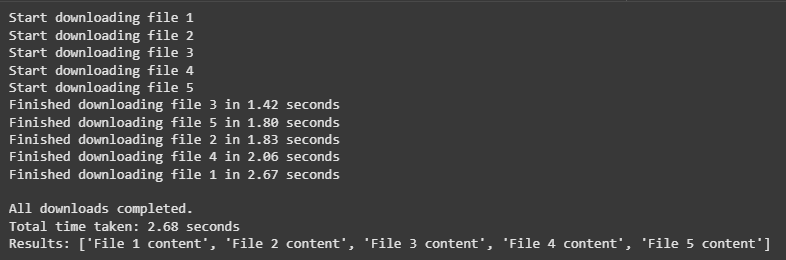

Imagine that you need to download several files. Every download takes time, but during this time your program can work on other downloads, not idle.

import asyncio

import random

import time

async def download_file(file_id: int):

print(f"Start downloading file {file_id}")

download_time = random.uniform(1, 3) # simulate variable download time

await asyncio.sleep(download_time) # non-blocking wait

print(f"Finished downloading file {file_id} in {download_time:.2f} seconds")

return f"File {file_id} content"

async def main():

files = (1, 2, 3, 4, 5)

start_time = time.time()

# Run downloads concurrently

results = await asyncio.gather(*(download_file(f) for f in files))

end_time = time.time()

print("\nAll downloads completed.")

print(f"Total time taken: {end_time - start_time:.2f} seconds")

print("Results:", results)

if __name__ == "__main__":

asyncio.run(main())

- All downloads start almost simultaneously, as shown in the “Start Download File x” line, appearing one after another immediately.

- Each file takes a different time to “download” (simulated with asyncio.sleep()), so they are done at different times – File 3 is done first in 1.42 seconds and last in 2.67 seconds.

- Since all downloads run simultaneously, the total time required is roughly equal to the longest single download time (2.68 seconds), rather than the sum of all times.

This proves the power of Asyncio – when tasks involve waiting, they can be completed in parallel, greatly improving efficiency.

Using Asyncio in AI applications with LLM

Now that we understand how Asyncio works, let’s apply it to real-world AI examples. Large language models (LLMs) (such as OpenAI’s GPT model) often involve multiple API calls, each of which takes time to complete. If we answer these calls one by one, we waste precious time waiting for a response.

In this section, we will use the OpenAI client to compare multiple tips for using and not using Asyncio. We will use 15 short tips to clearly demonstrate the performance differences. Check The complete code is here.

import asyncio

from openai import AsyncOpenAI

import os

from getpass import getpass

os.environ('OPENAI_API_KEY') = getpass('Enter OpenAI API Key: ')

import time

from openai import OpenAI

# Create sync client

client = OpenAI()

def ask_llm(prompt: str):

response = client.chat.completions.create(

model="gpt-4o-mini",

messages=({"role": "user", "content": prompt})

)

return response.choices(0).message.content

def main():

prompts = (

"Briefly explain quantum computing.",

"Write a 3-line haiku about AI.",

"List 3 startup ideas in agri-tech.",

"Summarize Inception in 2 sentences.",

"Explain blockchain in 2 sentences.",

"Write a 3-line story about a robot.",

"List 5 ways AI helps healthcare.",

"Explain Higgs boson in simple terms.",

"Describe neural networks in 2 sentences.",

"List 5 blog post ideas on renewable energy.",

"Give a short metaphor for time.",

"List 3 emerging trends in ML.",

"Write a short limerick about programming.",

"Explain supervised vs unsupervised learning in one sentence.",

"List 3 ways to reduce urban traffic."

)

start = time.time()

results = ()

for prompt in prompts:

results.append(ask_llm(prompt))

end = time.time()

for i, res in enumerate(results, 1):

print(f"\n--- Response {i} ---")

print(res)

print(f"\n(Synchronous) Finished in {end - start:.2f} seconds")

if __name__ == "__main__":

main()The synchronous version handles all 15 prompts, so the total time is the sum of each request duration. Since each request takes time to complete, the entire run time is longer – 49.76 In this case, a few seconds. Check The complete code is here.

from openai import AsyncOpenAI

# Create async client

client = AsyncOpenAI()

async def ask_llm(prompt: str):

response = await client.chat.completions.create(

model="gpt-4o-mini",

messages=({"role": "user", "content": prompt})

)

return response.choices(0).message.content

async def main():

prompts = (

"Briefly explain quantum computing.",

"Write a 3-line haiku about AI.",

"List 3 startup ideas in agri-tech.",

"Summarize Inception in 2 sentences.",

"Explain blockchain in 2 sentences.",

"Write a 3-line story about a robot.",

"List 5 ways AI helps healthcare.",

"Explain Higgs boson in simple terms.",

"Describe neural networks in 2 sentences.",

"List 5 blog post ideas on renewable energy.",

"Give a short metaphor for time.",

"List 3 emerging trends in ML.",

"Write a short limerick about programming.",

"Explain supervised vs unsupervised learning in one sentence.",

"List 3 ways to reduce urban traffic."

)

start = time.time()

results = await asyncio.gather(*(ask_llm(p) for p in prompts))

end = time.time()

for i, res in enumerate(results, 1):

print(f"\n--- Response {i} ---")

print(res)

print(f"\n(Asynchronous) Finished in {end - start:.2f} seconds")

if __name__ == "__main__":

asyncio.run(main())

The asynchronous version handles all 15 prompts at the same time, starting them almost at the same time, rather than one by one. As a result, the total run time is close to the time of the slowest single request – 8.25 seconds, not add all requests.

The big difference is because, in synchronous execution, each API call prevents the program from completing until it is finished, so the time adds up. In asynchronous execution using Asyncio, API calls run in parallel, allowing the program to process many tasks while waiting for a response, greatly reducing the total execution time.

Why this matters in AI applications

In real-world AI applications, waiting for each request to complete before starting the next request can quickly become a bottleneck, especially when dealing with multiple queries or data sources. This is especially common in workflows:

- Generate content for multiple users at the same time – for example, a chatbot, a recommendation engine, or a multi-user dashboard.

- Call LLM several times in a workflow, for example for summary, improvement, classification, or multi-step reasoning.

- Get data from multiple APIs – for example, combining LLM output with information from vector databases or external APIs.

In these cases, using Asyncio brings huge benefits:

- Improve performance – By making parallel API calls instead of waiting for each call in sequence, your system can handle more work in less time.

- Cost-efficiency – Faster execution speeds can reduce operational costs and batch requests where possible can further optimize the use of paid APIs.

- A better user experience – Concurrency makes the application feel faster, which is crucial for real-time systems like AI assistants and chatbots.

- Scalability – Asynchronous mode allows your application to handle more simultaneous requests without increasing resource consumption proportionally.

Check The complete code is here. Check out ours anytime Tutorials, codes and notebooks for github pages. Also, please stay tuned for us twitter And don’t forget to join us 100K+ ml reddit And subscribe Our newsletter.

I am a civil engineering graduate in Islamic Islam in Jamia Milia New Delhi (2022) and I am very interested in data science, especially neural networks and their applications in various fields.

🔥 (Recommended Reading) NVIDIA AI Open Source VIPE (Video Pose Engine): a powerful and universal 3D video annotation tool for spatial AI

1005 Alcyon Dr Bellmawr NJ 08031

1005 Alcyon Dr Bellmawr NJ 08031