Salesforce AI Research has released CODA-1.7B, a diffusion-based language model for code. Rather than predicting the next token from left to right, it generates by denoising the whole sequence with bidirectional context and updating multiple tokens in parallel. The research team published Base and Instruct checkpoints together with an end-to-end training, evaluation, and serving stack.

Understanding the architecture and training

CODA adapts a 1.7B-parameter backbone to discrete diffusion for text. Masked sequences are iteratively denoised with attention over the full sequence, which enables native infilling and non-autoregressive decoding. The model card documents a three-stage pipeline (pre-training with bidirectional masking, supervised post-training, and progressive denoising at inference time) along with reproducible scripts for TPU pre-training, GPU fine-tuning, and evaluation.
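To make the masked-diffusion idea concrete, here is a minimal sketch of the training-time corruption and loss, assuming a generic masked-diffusion formulation: each token is masked independently with probability t, and cross-entropy is computed only on masked positions and scaled by 1/t. Several masked-diffusion language models use this 1/t weighting, but the article does not state CODA's exact objective, so treat it as an assumption. The names `corrupt`, `masked_diffusion_loss`, `MASK`, and `uniform_model` are hypothetical illustrations and not part of the CODA codebase.

```python
import math
import random

MASK = "<mask>"  # hypothetical mask token string


def corrupt(tokens, t, rng):
    """Forward (noising) process: mask each token independently with probability t."""
    return [MASK if rng.random() < t else tok for tok in tokens]


def masked_diffusion_loss(tokens, noisy, prob_of_target, t):
    """Negative log-likelihood on masked positions only, weighted by 1/t (assumed).

    prob_of_target(noisy, i, token) is a hypothetical model call. It receives the
    whole noisy sequence, so it can use context on both sides of position i.
    """
    if not 0.0 < t <= 1.0:
        raise ValueError("t must lie in (0, 1]")
    nll = sum(
        -math.log(prob_of_target(noisy, i, clean))
        for i, (clean, seen) in enumerate(zip(tokens, noisy))
        if seen == MASK  # unmasked positions contribute no loss
    )
    return nll / (t * len(tokens))


VOCAB_SIZE = 16


def uniform_model(noisy, i, token):
    # Toy stand-in for a real denoiser: uniform over a 16-token vocabulary.
    return 1.0 / VOCAB_SIZE


tokens = "def add ( a , b ) : return a + b".split()
noisy = corrupt(tokens, t=0.5, rng=random.Random(0))
print(noisy)
print(masked_diffusion_loss(tokens, noisy, uniform_model, t=0.5))
```

Because `prob_of_target` sees the entire corrupted sequence, a position can be predicted from tokens on both sides. This is the bidirectional property that distinguishes the setup from left-to-right training.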

Key features surfaced in the release:

- Bidirectional context via diffusion denoising (no fixed generation order).

- Confidence-guided sampling (entropy-style decoding) to trade off quality against speed.

- An open training pipeline with deployment scripts and a CLI.
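Confidence-guided sampling can be illustrated with a small sketch. At each denoising step, the model proposes a distribution for every masked position. The positions whose distributions have the lowest entropy (that is, the most confident ones) are committed first, and the rest remain masked for later steps. This is a generic entropy-ranked unmasking loop written under that assumption. It is not CODA's implementation, and it omits the temperature knob (ALG_TEMP). The functions `entropy_decode` and `toy_predict` and the toy `TARGET` sequence are hypothetical.

```python
import math

MASK = None  # masked slots are represented as None in this sketch


def entropy(probs):
    """Shannon entropy (nats) of a {token: probability} distribution."""
    return -sum(p * math.log(p) for p in probs.values() if p > 0)


def entropy_decode(length, predict, steps):
    """Start fully masked; each step, commit the lowest-entropy masked positions."""
    if steps <= 0:
        raise ValueError("steps must be positive")
    seq = [MASK] * length
    per_step = math.ceil(length / steps)  # tokens committed per step
    order = []  # positions in the order they were unmasked
    for _ in range(steps):
        masked = [i for i, tok in enumerate(seq) if tok is MASK]
        if not masked:
            break
        dists = predict(seq)  # {position: {token: prob}} for masked positions
        # Most confident (lowest entropy) first; ties keep left-to-right order.
        ranked = sorted(masked, key=lambda i: entropy(dists[i]))
        for i in ranked[:per_step]:
            dist = dists[i]
            seq[i] = max(dist, key=dist.get)  # greedy choice at that position
            order.append(i)
    return seq, order


TARGET = ["def", "f", "(", ")", ":"]


def toy_predict(seq):
    # Hypothetical model stand-in: even positions are "easy" (p=0.9) and odd
    # positions are "hard" (p=0.6). A real model would condition on seq.
    out = {}
    for i, tok in enumerate(seq):
        if tok is MASK:
            p = 0.9 if i % 2 == 0 else 0.6
            out[i] = {TARGET[i]: p, "<other>": 1.0 - p}
    return out


seq, order = entropy_decode(len(TARGET), toy_predict, steps=3)
print(seq, order)
```

Fewer steps commit more tokens per forward pass, which is faster but forces less confident positions to be decided earlier. This is the quality/speed trade-off the bullet refers to.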

How does it perform on benchmarks?

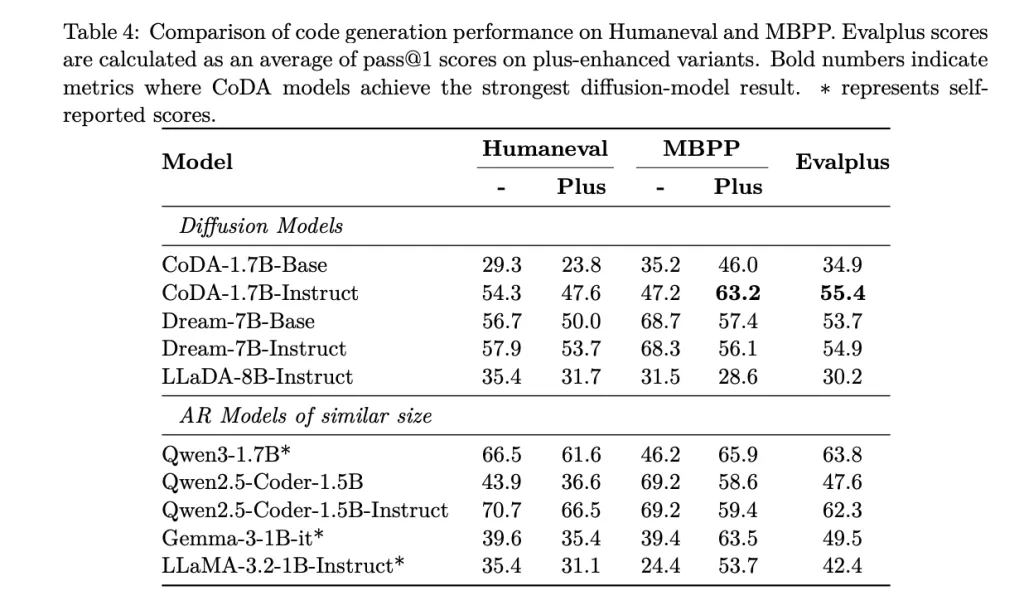

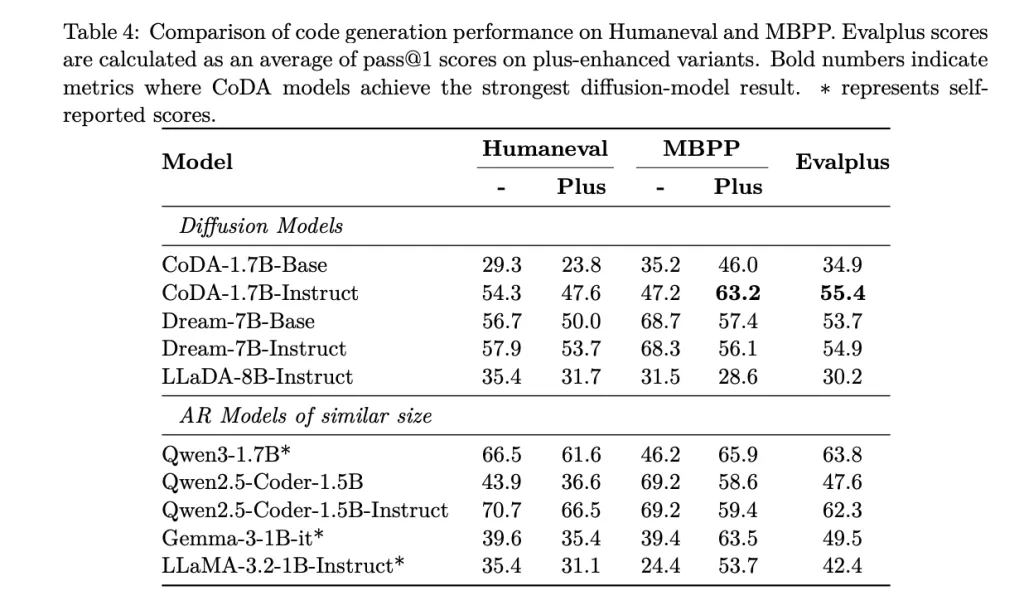

On standard code benchmarks, CODA-1.7B-Instruct reports HumanEval 54.3%, HumanEval+ 47.6%, MBPP 47.2%, MBPP+ 63.2%, and an EvalPlus aggregate of 55.4% (pass@1). For context, the model card compares it against diffusion baselines including Dream-7B-Instruct (57.9% on HumanEval). This indicates that a 1.7B footprint is competitive with some 7B diffusion models on several metrics while using fewer parameters.
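The reported numbers can be sanity-checked with a little arithmetic. The article does not define the EvalPlus aggregate. However, the reported 55.4 matches the unweighted mean of the HumanEval+ and MBPP+ scores, so this sketch assumes that definition. It also computes the HumanEval gap to Dream-7B-Instruct and the parameter ratio, using only figures quoted above.

```python
# Scores quoted in the article (pass@1, percent).
coda = {"HumanEval": 54.3, "HumanEval+": 47.6, "MBPP": 47.2, "MBPP+": 63.2}
dream_7b_humaneval = 57.9

# Assumption: the EvalPlus aggregate is the plain mean of the two "+" suites.
evalplus = round((coda["HumanEval+"] + coda["MBPP+"]) / 2, 1)

humaneval_gap = round(dream_7b_humaneval - coda["HumanEval"], 1)  # points behind
param_ratio = 7 / 1.7  # how many times larger the 7B baseline is

print(f"EvalPlus aggregate (assumed mean): {evalplus}")
print(f"HumanEval gap to Dream-7B: {humaneval_gap} points")
print(f"Parameter ratio: {param_ratio:.1f}x")
```

In other words, the model trails the 7B baseline by a few HumanEval points while being roughly four times smaller.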

Inference behavior

Generation cost is governed by the number of diffusion steps. CODA exposes knobs such as STEPS, ALG="entropy", ALG_TEMP, and block length for tuning the latency/quality trade-off. Because tokens are updated in parallel under full attention, CODA aims for low wall-clock latency at small scale compared with larger diffusion models at comparable step budgets. (Hugging Face)
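The sketch below shows how a step budget and a block length could jointly determine the work per forward pass. It assumes a LLaDA-style semi-autoregressive schedule, in which the output is split into fixed-size blocks that are denoised in order, the total step budget is divided evenly across blocks, and each block's tokens are spread as evenly as possible over its steps. The article does not specify how CODA's STEPS and block-length knobs interact, so `block_schedule` and its parameters are hypothetical.

```python
def block_schedule(gen_length, steps, block_length):
    """Tokens unmasked per step for each block (assumed LLaDA-style schedule)."""
    if block_length <= 0 or gen_length % block_length:
        raise ValueError("gen_length must be a positive multiple of block_length")
    num_blocks = gen_length // block_length
    if steps <= 0 or steps % num_blocks:
        raise ValueError("steps must be a positive multiple of the number of blocks")
    steps_per_block = steps // num_blocks
    schedule = []
    for _ in range(num_blocks):
        base, extra = divmod(block_length, steps_per_block)
        # The first `extra` steps of each block commit one additional token.
        schedule.append([base + (1 if s < extra else 0) for s in range(steps_per_block)])
    return schedule


# 128 new tokens, 32 steps, blocks of 32: 4 blocks x 8 steps, 4 tokens per step.
sched = block_schedule(gen_length=128, steps=32, block_length=32)
forward_passes = sum(len(block) for block in sched)
print(sched[0], forward_passes)  # compare with 128 passes for token-by-token decoding
```

Under this assumption, halving the step budget halves the number of forward passes but doubles the tokens committed per pass. That is the latency/quality lever the paragraph describes.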

Deployment and Licensing

The repository provides a FastAPI server with OpenAI-compatible APIs and an interactive CLI for local inference. The instructions cover environment setup and include a start_server.sh launcher. The model card and a Hugging Face collection centralize the artifacts, and the checkpoints are published on Hugging Face under CC BY-NC 4.0.
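Because the server is described as OpenAI-compatible, a client can presumably send a standard chat-completions request. The following is a minimal stdlib sketch under that assumption. The base URL, port, and model id are hypothetical placeholders; check start_server.sh and the repository README for the real values. Whether the server honors every field (for example, `temperature`) is also an assumption.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"  # hypothetical host/port; see start_server.sh
MODEL = "coda-1.7b-instruct"           # hypothetical model id


def build_chat_request(prompt, max_tokens=256, temperature=0.0):
    """Build (but do not send) an OpenAI-style /chat/completions POST request."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, **kwargs):
    """Send the request to a running server and return the first reply's text."""
    req = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(req, timeout=120) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

With the server running locally, `chat("Write a Python function that reverses a string.")` would return the generated code as a string. Keeping request construction separate from sending makes the payload easy to inspect or log before hitting the server.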

CODA-1.7B is a compact reference for small-scale discrete-diffusion code generation. It has 1.7B parameters, uses bidirectional denoising with parallel token updates, and comes with a reproducible pipeline covering pre-training, SFT, and serving. Its reported pass@1 results (HumanEval 54.3, HumanEval+ 47.6, MBPP 47.2, MBPP+ 63.2, EvalPlus aggregate 55.4) are competitive with some 7B diffusion baselines (e.g., Dream-7B HumanEval 57.9) while using fewer parameters. Inference latency is explicitly controlled by the step count and decoding knobs (STEPS, entropy-style guidance), which operators can use to tune throughput against quality. The release includes weights on Hugging Face and a FastAPI server/CLI for local deployment.

Check out the Paper, GitHub repository, and model on Hugging Face.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform known for in-depth coverage of machine learning and deep learning news that is both technically sound and accessible to a wide audience. The platform draws over 2 million views per month, reflecting its popularity among readers.


1005 Alcyon Dr Bellmawr NJ 08031