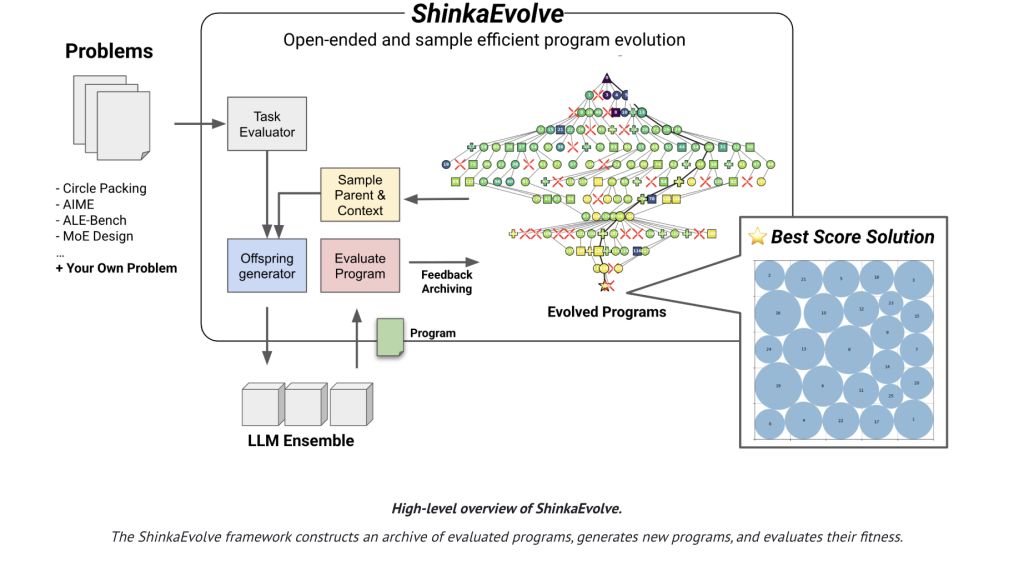

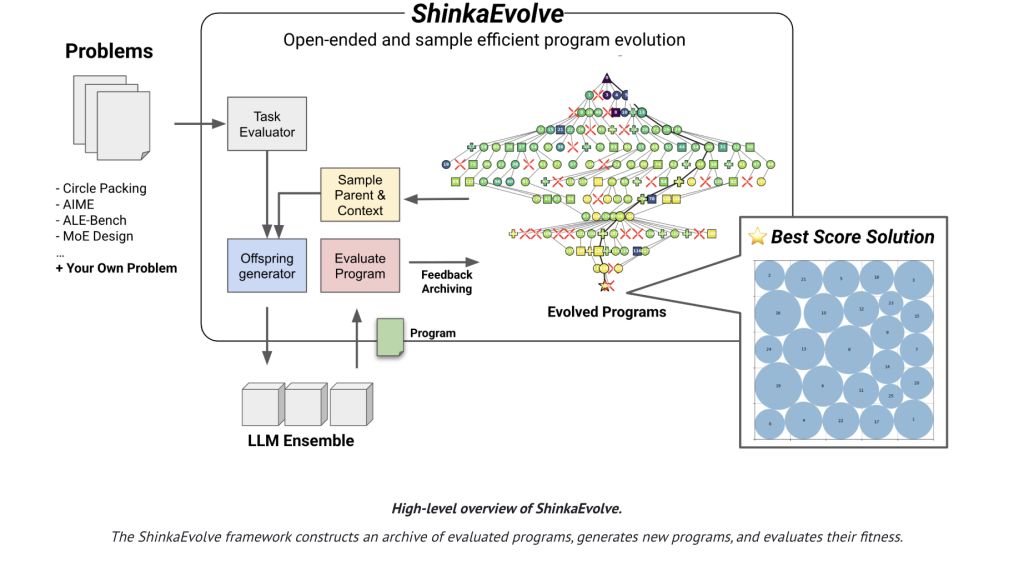

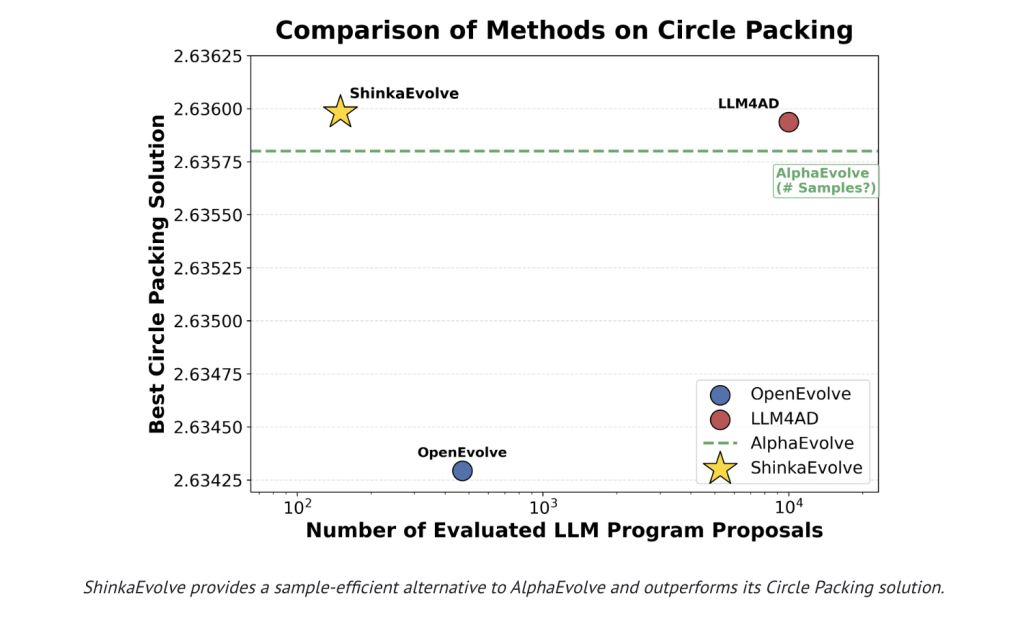

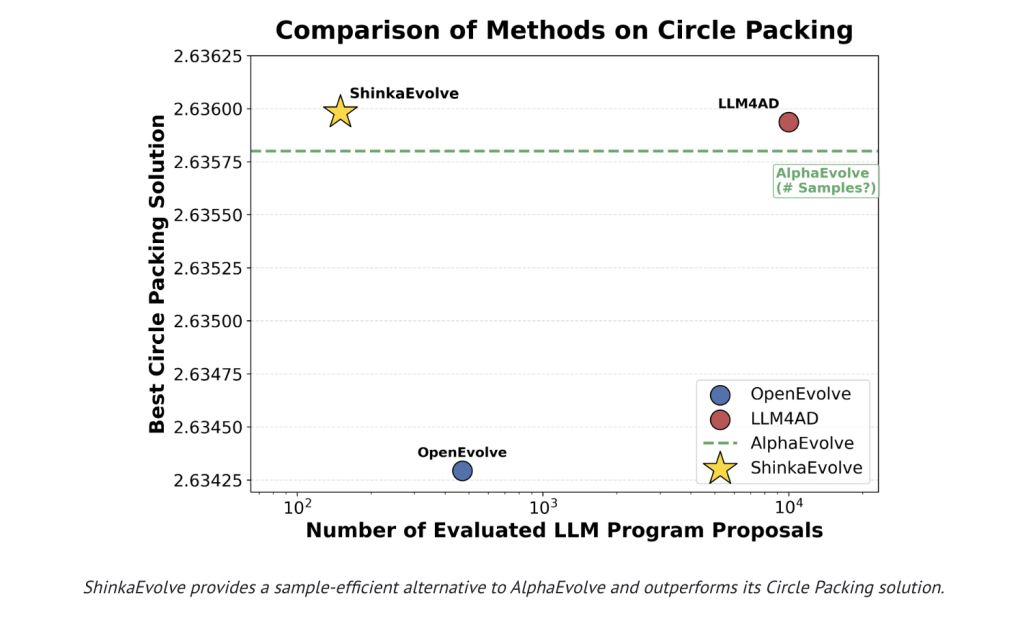

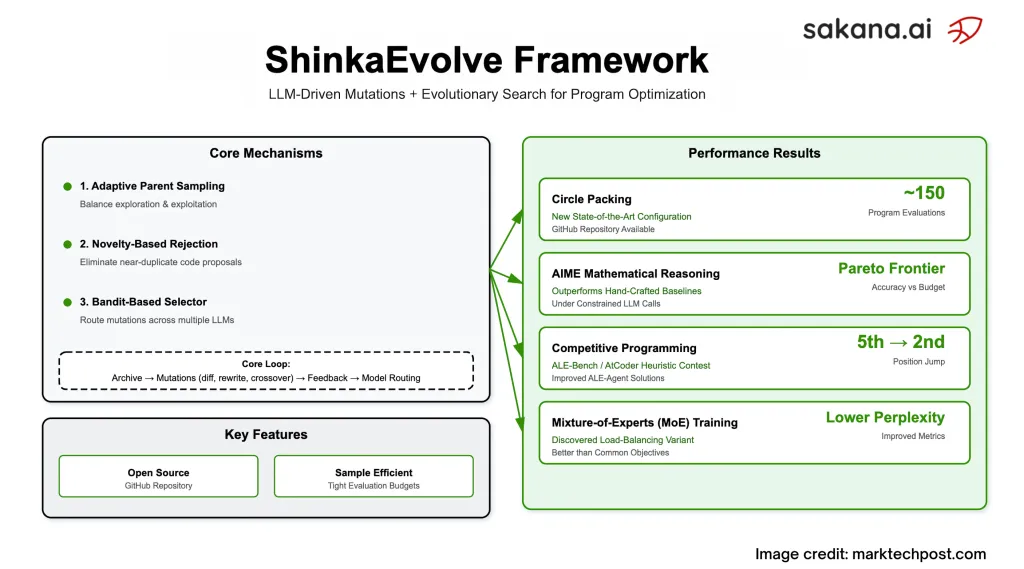

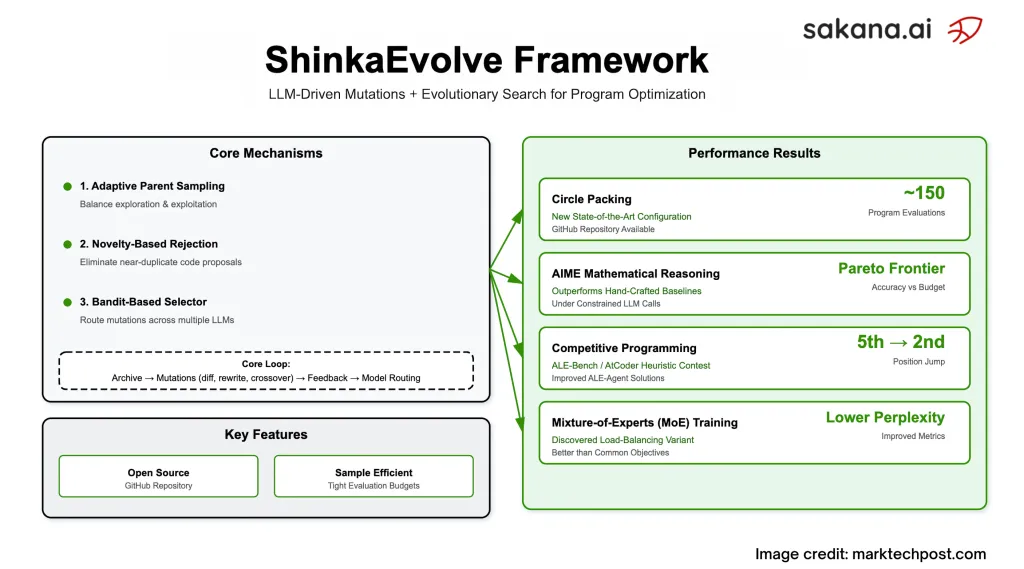

Sakana AI has released ShinkaEvolve, an open-source framework that uses large language models (LLMs) as mutation operators in an evolutionary loop to evolve programs for scientific and engineering problems, while drastically reducing the number of evaluations needed to reach strong solutions. On the canonical circle-packing benchmark (n = 26 circles in a unit square), ShinkaEvolve reports a new SOTA configuration using ~150 program evaluations, where previous systems typically burned thousands. The project is released under Apache-2.0, with a research report and public code.

What problem does it actually solve?

Most “agentic” code-evolution systems explore by brute force: they mutate code, run it, score it, and repeat, consuming a huge sampling budget. ShinkaEvolve explicitly targets that waste with three interacting components:

- Adaptive parent sampling balances exploration and exploitation. Parents are drawn from “islands” via fitness- and novelty-aware policies (power-law, or weighted by performance and offspring counts) rather than always hill-climbing from the current best.

- Novelty-based rejection filtering avoids re-evaluating near-duplicates. Mutable code segments are embedded; if cosine similarity to an existing program exceeds a threshold, a secondary LLM acts as a “novelty judge” before execution.

- Bandit-based LLM ensembling lets the system learn which model (e.g., GPT/Gemini/Claude/DeepSeek families) is yielding the largest relative fitness jumps and route future mutations accordingly (UCB1 updates based on improvement over the parent/baseline).
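The two parent-selection policies in the first bullet can be sketched in a few lines of Python. This is a minimal illustration, assuming an island is a plain list of programs that each carry a fitness score and an offspring count; the `Program` class, the exponent `alpha`, the sigmoid sharpness `lam`, and the exact weighting formulas are hypothetical choices, not ShinkaEvolve's actual code.

```python
import math
import random
import statistics
from dataclasses import dataclass


@dataclass
class Program:
    """Hypothetical archive entry: program source plus bookkeeping."""
    code: str
    fitness: float
    offspring: int = 0


def power_law_sample(island, alpha=1.0, rng=random):
    """Rank-based sampling: the program at rank r (1 = best) gets weight r ** -alpha."""
    ranked = sorted(island, key=lambda p: p.fitness, reverse=True)
    weights = [(rank + 1) ** -alpha for rank in range(len(ranked))]
    return rng.choices(ranked, weights=weights, k=1)[0]


def weighted_probs(island, lam=10.0):
    """Selection probabilities mixing a performance term and an offspring penalty."""
    median = statistics.median(p.fitness for p in island)
    weights = []
    for p in island:
        # Performance term: sigmoid of fitness relative to the island median.
        perf = 1.0 / (1.0 + math.exp(-lam * (p.fitness - median)))
        # Novelty term: shrinks as a parent accumulates children.
        novelty = 1.0 / (1.0 + p.offspring)
        weights.append(perf * novelty)
    total = sum(weights)
    return [w / total for w in weights]


def weighted_sample(island, lam=10.0, rng=random):
    """Draw one parent according to weighted_probs."""
    return rng.choices(island, weights=weighted_probs(island, lam), k=1)[0]
```

Raising `alpha` makes power-law sampling greedier, while the offspring term in `weighted_probs` keeps a single strong parent from monopolizing the island: a slightly weaker program with no children can outrank the current best once the best has been mutated many times.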

Does the sample-efficiency claim hold beyond toy problems?

The research team evaluated four distinct domains and shows consistent gains with small budgets:

- Circle packing (n = 26): reaches an improved configuration in roughly 150 evaluations; the research team also validated it with stricter exact-constraint checking.

- AIME math reasoning (2024 set): evolves agentic scaffolds that trace a Pareto frontier (accuracy vs. LLM-call budget), outperforming hand-built baselines under limited query budgets and transferring to other AIME years and other LLMs.

- Competitive programming (ALE-Bench LITE): starting from ALE-Agent solutions, ShinkaEvolve delivers a ~2.3% mean improvement across 10 tasks and pushes one task's solution from 5th → 2nd in a counterfactual AtCoder leaderboard placement.

- LLM training (Mixture-of-Experts): evolves a new load-balancing loss that improves perplexity and downstream accuracy at multiple regularization strengths compared with the widely used global-batch LBL.
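The “stricter exact-constraint checking” for circle packing boils down to verifying that every circle lies inside the unit square and that no two circles overlap. Below is a minimal Python validator under stated assumptions: circles are `(x, y, r)` tuples, `tol` is a hypothetical slack parameter (0.0 means fully strict), and scoring a packing by its sum of radii is assumed for illustration, since the article does not spell out the objective.

```python
import math


def is_valid_packing(circles, tol=0.0):
    """Check containment in [0, 1]^2 and pairwise non-overlap.

    circles: list of (x, y, r) tuples; tol=0.0 allows no slack (strict check).
    """
    for x, y, r in circles:
        if r <= 0:
            return False
        # Each circle must sit fully inside the unit square.
        if x - r < -tol or x + r > 1 + tol or y - r < -tol or y + r > 1 + tol:
            return False
    for i in range(len(circles)):
        xi, yi, ri = circles[i]
        for j in range(i + 1, len(circles)):
            xj, yj, rj = circles[j]
            # Centers must be at least r_i + r_j apart (touching is allowed).
            if math.hypot(xi - xj, yi - yj) < ri + rj - tol:
                return False
    return True


def score(circles):
    """Assumed objective: sum of radii, or 0.0 if the packing is infeasible."""
    return sum(r for _, _, r in circles) if is_valid_packing(circles) else 0.0
```

Returning 0.0 for infeasible packings means an evolved program can never gain fitness by slightly violating a constraint, which is the point of a strict checker.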

What does the evolutionary loop actually look like?

ShinkaEvolve maintains an archive of evaluated programs with fitness, public metrics, and textual feedback. In each generation, it samples an island and parent(s); builds a mutation context from top-K and random “inspiration” programs; and then proposes edits via three operators (diff edits, full rewrites, and LLM-guided crossovers), using explicit markers to protect immutable code regions. Executed candidates update both the archive and the bandit statistics that steer subsequent LLM/model selection. Periodically, the system produces a meta-scratchpad summarizing recently successful strategies; these summaries are fed back into the prompts to accelerate later generations.
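The bandit statistics that steer model selection can be illustrated with textbook UCB1. In this sketch each LLM is an arm, and a pull is rewarded with the child's fitness improvement over its parent, clipped at zero; the model names, that reward definition, and the exploration constant `c` are assumptions, and ShinkaEvolve's actual update rule may differ.

```python
import math


class UCB1Router:
    """Textbook UCB1 over a fixed set of LLM 'arms' (model names are hypothetical)."""

    def __init__(self, models, c=math.sqrt(2)):
        self.models = list(models)
        self.c = c
        self.counts = {m: 0 for m in self.models}
        self.totals = {m: 0.0 for m in self.models}

    def select(self):
        # Try every model once before trusting the statistics.
        for m in self.models:
            if self.counts[m] == 0:
                return m
        n = sum(self.counts.values())

        def ucb(m):
            mean = self.totals[m] / self.counts[m]
            return mean + self.c * math.sqrt(math.log(n) / self.counts[m])

        return max(self.models, key=ucb)

    def update(self, model, parent_fitness, child_fitness):
        # Reward = improvement over the parent, never negative.
        reward = max(0.0, child_fitness - parent_fitness)
        self.counts[model] += 1
        self.totals[model] += reward
```

Usage: call `select()` to pick the model for the next mutation, run it, then call `update(model, parent_fitness, child_fitness)`. Models that keep producing improvements accumulate a higher mean reward and get routed more mutations, while the square-root bonus keeps rarely used models from being abandoned too early.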

What are the specific results?

- Circle packing: the combination of structured initialization (e.g., golden-angle patterns), hybrid global-local search (simulated annealing + SLSQP), and escape mechanisms (temperature reheating, ring rotations) was discovered by the system, not encoded a priori.

- AIME scaffolds: a three-stage expert ensemble (generation → critical peer review → synthesis) hits the best accuracy/cost trade-off at ~7 calls while remaining robust when swapped to different LLM backends.

- ALE-Bench: targeted engineering wins (e.g., caching kd-tree subtree statistics; “targeted edge moves” toward misclassified items) push scores up without wholesale rewrites.

- MoE loss: adds an entropy-modulated under-use penalty to the global-batch objective; empirically, it reduces mis-routing and improves perplexity/benchmarks as layer routing becomes concentrated.
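For reference, the global-batch load-balancing loss that the evolved objective builds on is commonly written as the Switch Transformer-style auxiliary loss N_E · Σ_i f_i · P_i, where N_E is the number of experts, f_i is the fraction of tokens routed to expert i, and P_i is expert i's mean router probability, with both statistics gathered over the whole global batch rather than per micro-batch. The plain-Python sketch below computes only that baseline, assuming top-1 routing; it does not reproduce ShinkaEvolve's evolved entropy-modulated penalty, whose exact form the article does not give.

```python
def global_batch_lbl(router_probs):
    """Switch-style auxiliary loss N_E * sum_i f_i * P_i with top-1 routing.

    router_probs: list of per-token probability vectors (each sums to 1),
    assumed to be gathered over the global batch (all micro-batches/ranks).
    """
    num_tokens = len(router_probs)
    num_experts = len(router_probs[0])
    counts = [0] * num_experts
    prob_sums = [0.0] * num_experts
    for probs in router_probs:
        # Top-1 assignment: each token is dispatched to its argmax expert.
        top = max(range(num_experts), key=lambda e: probs[e])
        counts[top] += 1
        for e, p in enumerate(probs):
            prob_sums[e] += p
    f = [c / num_tokens for c in counts]      # fraction of tokens per expert
    P = [s / num_tokens for s in prob_sums]   # mean router probability per expert
    return num_experts * sum(fi * pi for fi, pi in zip(f, P))
```

A perfectly balanced router gives a loss of 1.0, and collapsing every token onto one expert raises it to N_E, which is the pressure an added under-use penalty would be shaping further.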

How does this compare to AlphaEvolve and related systems?

AlphaEvolve demonstrated strong closed-source results, but at higher evaluation counts. ShinkaEvolve reproduces and surpasses the circle-packing result with far fewer samples and releases all components as open source. The research team also compares variants (single model vs. fixed ensemble vs. bandit ensemble) and ablates parent selection and novelty filtering, showing that each contributes to the observed efficiency.

Summary

ShinkaEvolve is an Apache-2.0 framework for LLM-driven program evolution that cuts evaluations from thousands to hundreds by combining fitness/novelty-aware parent sampling, embedding-plus-LLM novelty rejection, and a UCB1-style adaptive LLM ensemble. It sets a new SOTA on circle packing (~150 evals), finds stronger AIME scaffolds under strict query budgets, improves ALE-Bench solutions (~2.3% mean gain, 5th → 2nd on one task), and discovers a new MoE load-balancing loss that improves perplexity and downstream accuracy. The code and report are public.

FAQ – ShinkaEvolve

1) What is ShinkaEvolve?

An open-source framework that couples LLM-driven program mutations with evolutionary search to automate algorithm discovery and optimization. The code and report are public.

2) How does it achieve higher sample efficiency than prior evolutionary systems?

Three mechanisms: adaptive parent sampling (balancing exploration and exploitation), novelty-based rejection to avoid duplicate evaluations, and a bandit-based selector that routes mutations to the most promising LLMs.
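The novelty-based rejection step can be sketched as a cosine-similarity gate placed in front of execution. This example assumes embeddings are plain float vectors, uses a hypothetical `threshold` of 0.95, and replaces the secondary “novelty judge” LLM with a caller-supplied `judge` callback, since the real embedding model and judge prompt are not described here.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def accept_candidate(candidate_emb, archive_embs, threshold=0.95, judge=None):
    """Return True if the candidate program should be executed.

    If no archived program is more similar than `threshold`, accept outright.
    Otherwise defer to `judge` (a stand-in for the LLM novelty judge); with
    no judge supplied, reject the near-duplicate.
    """
    closest = max((cosine(candidate_emb, e) for e in archive_embs), default=0.0)
    if closest <= threshold:
        return True
    return judge(closest) if judge is not None else False
```

The cheap embedding check handles the common case, and the more expensive judge is consulted only for borderline near-duplicates, so most of the evaluation budget goes to genuinely different programs.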

3) What evidence supports these claims?

It reaches state-of-the-art circle packing with about 150 evaluations; on AIME-2024, it evolves scaffolds under a budget of 10 queries per problem; and it improves strong ALE-Bench baseline solutions.

4) Where can I run it, and what is the license?

The GitHub repository provides a WebUI and examples; ShinkaEvolve is released under Apache-2.0.
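The AIME result is framed as a Pareto frontier of accuracy versus LLM calls per problem, subject to a per-problem query cap. A minimal sketch of extracting that frontier from a set of evaluated scaffolds follows; the `(name, calls, accuracy)` tuple layout and the helper names are hypothetical, and no numbers from the paper are reproduced.

```python
def pareto_frontier(points):
    """Keep (name, calls, accuracy) entries not dominated by any other entry.

    An entry is dominated if another uses no more calls, is at least as
    accurate, and is strictly better on at least one of the two axes.
    """
    frontier = []
    for name, calls, acc in points:
        dominated = any(
            c2 <= calls and a2 >= acc and (c2 < calls or a2 > acc)
            for _, c2, a2 in points
        )
        if not dominated:
            frontier.append((name, calls, acc))
    return sorted(frontier, key=lambda p: p[1])


def best_under_budget(points, max_calls):
    """Most accurate entry that respects a per-problem call cap (e.g. 10)."""
    feasible = [p for p in points if p[1] <= max_calls]
    return max(feasible, key=lambda p: p[2], default=None)
```

The quadratic dominance check is fine for the handful of scaffolds an evolutionary run keeps; a sort-and-sweep version would be preferable for large archives.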

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform known for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has over 2 million monthly views, demonstrating its popularity among readers.

1005 Alcyon Dr Bellmawr NJ 08031