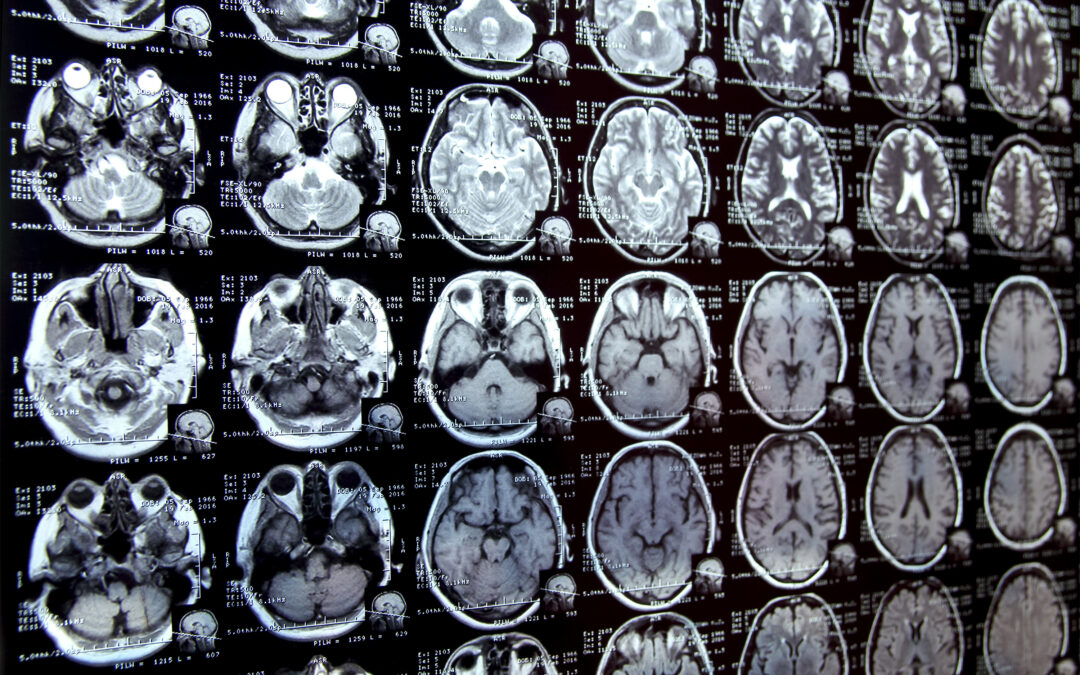

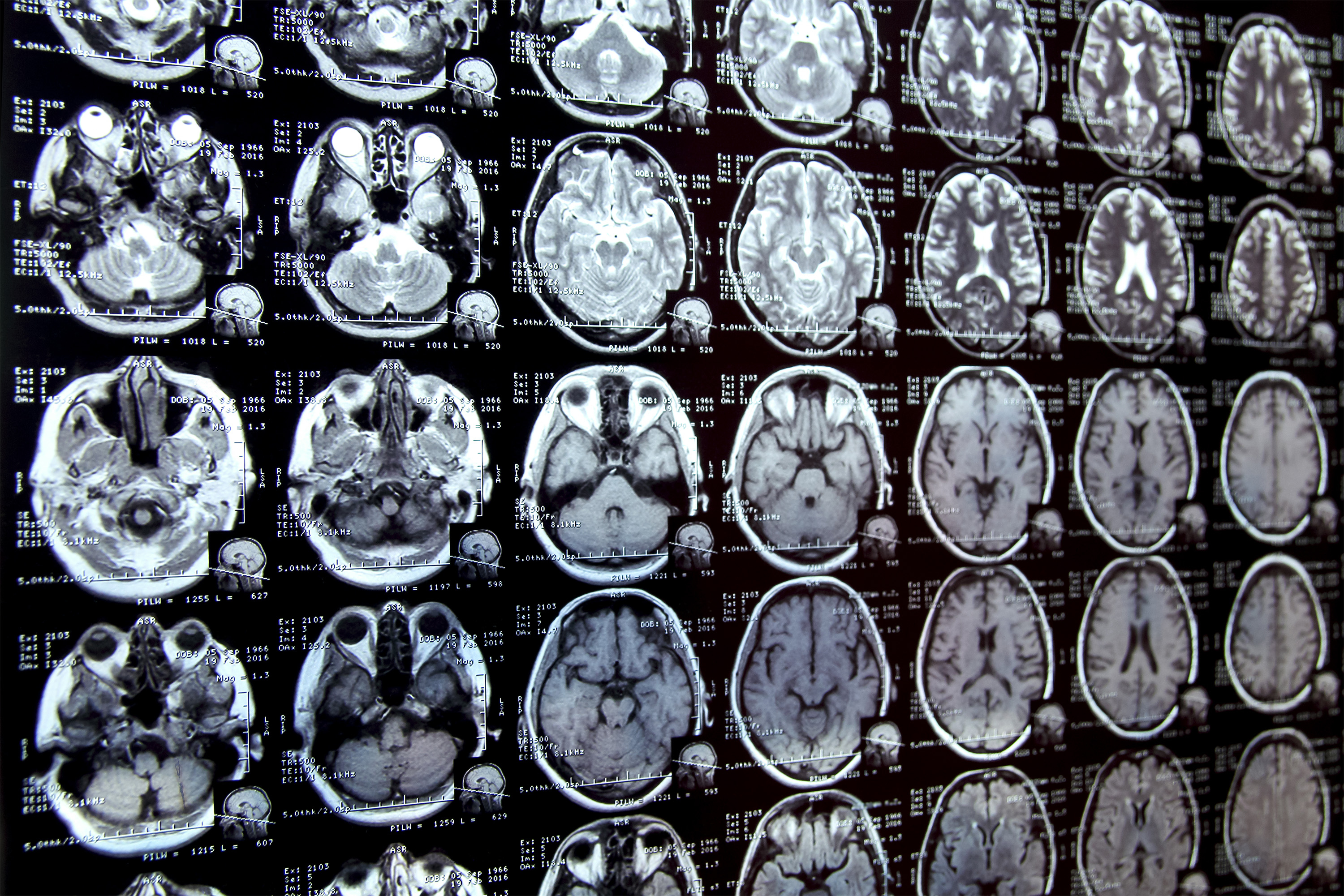

Annotating areas of interest in medical images, a process called segmentation, is often the first step clinical researchers take when conducting a new study involving biomedical images.

For example, to determine how the size of the brain’s hippocampus changes as patients age, scientists first outline each hippocampus in a series of brain scans. For many structures and image types, this is a manual process that can be extremely time-consuming, especially when the regions being studied are difficult to delineate.

To streamline this process, MIT researchers have developed an AI-based system that lets researchers rapidly segment new biomedical imaging datasets by clicking, scribbling, and drawing boxes on the images. The AI model uses these interactions to predict the segmentation.

As the user marks additional images, the number of interactions they need to perform decreases, eventually dropping to zero. The model can then accurately segment each new image without user input.

It can do this because the model’s architecture is specifically designed to use information from images that have already been segmented when making new predictions.

Unlike other medical image segmentation models, this system lets users segment an entire dataset without repeating their work for each image.

In addition, the interactive tool does not require a presegmented image dataset for training, so users do not need machine-learning expertise or extensive computing resources. They can apply the system to a new segmentation task without retraining the model.

In the long run, the tool could accelerate research on new treatments and reduce the cost of clinical trials and medical research. Physicians could also use it to make clinical applications, such as radiation therapy planning, more efficient.

“Many scientists might only have time to segment a few images per day for their research, because manual image segmentation is so time-consuming. Our hope is that this system will enable new science by allowing clinical researchers to conduct studies they could not do before because of the lack of an efficient tool.”

The paper’s co-authors include Jose Javier Gonzalez Ortiz PhD ’24; John Guttag, the Dugald C. Jackson Professor of Computer Science and Electrical Engineering; and an assistant professor at Harvard Medical School and MGH who is also a research scientist in the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL). The research will be presented at the International Conference on Computer Vision.

Simplifying segmentation

Researchers mainly use two methods to segment new sets of medical images. With interactive segmentation, they input an image into an AI system and use an interface to mark areas of interest. The model then predicts the segmentation based on these interactions.
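A common way for interactive segmentation models to consume clicks, scribbles, and boxes is to rasterize each kind of interaction into its own image-sized binary map that is fed to the network alongside the image. The article does not describe the input format MultiverSeg uses, so the sketch below is only an illustration under that assumption; `Interactions` and `encode_prompts` are hypothetical names, and coordinates are (row, column) pairs.

```python
from dataclasses import dataclass, field

Grid = list[list[int]]  # a binary H x W map


@dataclass
class Interactions:
    """Hypothetical container for one image's user inputs, as (row, col) coordinates."""
    clicks: list[tuple[int, int]] = field(default_factory=list)
    scribbles: list[list[tuple[int, int]]] = field(default_factory=list)  # each stroke is a pixel path
    boxes: list[tuple[int, int, int, int]] = field(default_factory=list)  # (r0, c0, r1, c1), inclusive


def empty(h: int, w: int) -> Grid:
    return [[0] * w for _ in range(h)]


def encode_prompts(inter: Interactions, h: int, w: int) -> dict[str, Grid]:
    """Rasterize each interaction type into its own binary channel (an assumed design)."""
    click_map, scribble_map, box_map = empty(h, w), empty(h, w), empty(h, w)
    for r, c in inter.clicks:
        click_map[r][c] = 1
    for stroke in inter.scribbles:
        for r, c in stroke:
            scribble_map[r][c] = 1
    for r0, c0, r1, c1 in inter.boxes:
        for r in range(r0, r1 + 1):
            for c in range(c0, c1 + 1):
                box_map[r][c] = 1
    return {"clicks": click_map, "scribbles": scribble_map, "boxes": box_map}


if __name__ == "__main__":
    prompts = encode_prompts(Interactions(clicks=[(1, 1)], boxes=[(0, 0, 1, 2)]), h=3, w=4)
    print(sum(map(sum, prompts["boxes"])))  # 6 pixels covered by the 2 x 3 box
```

Real tools typically also distinguish positive from negative clicks (for example, with separate channels); that detail is omitted here for brevity.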

ScribblePrompt, a tool previously developed by the MIT researchers, lets users do this, but they must repeat the process for each new image.

Another approach is to develop a task-specific AI model that segments images automatically. This requires the user to manually segment hundreds of images to create a dataset and then train a machine-learning model, which predicts the segmentation of new images. But users must restart this complex, machine-learning-based process from scratch for each new task, and if the model makes mistakes, there is no way to correct it.

The new system, MultiverSeg, combines the best of both approaches. It predicts the segmentation of a new image based on user interactions, such as scribbles, but also keeps each segmented image in a context set that it refers to later.

When a user uploads a new image and marks areas of interest, the model draws on the examples in its context set to make a more accurate prediction with less user input.
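The workflow described above (predict, let the user correct, then add the finished segmentation to the context set) can be sketched as a simple loop. This is a minimal illustration, not MultiverSeg itself: `toy_predict` is a hypothetical stand-in that learns an intensity threshold from the context set and the user’s clicks, and `first_error` is a hypothetical simulated user who clicks the first mislabeled pixel. Only the loop structure reflects the article.

```python
from typing import Callable, Optional

Image = list[list[float]]
Mask = list[list[int]]
Click = tuple[int, int, int]  # (row, col, label); label 1 = foreground, 0 = background
Predictor = Callable[[Image, list[Click], list[tuple[Image, Mask]]], Mask]


def toy_predict(image: Image, clicks: list[Click], context: list[tuple[Image, Mask]]) -> Mask:
    """Toy stand-in for an in-context model (NOT MultiverSeg): learn an intensity threshold."""
    fg: list[float] = []
    bg: list[float] = []
    # Evidence from previously segmented images in the context set.
    for img, msk in context:
        for row_i, row_m in zip(img, msk):
            for v, m in zip(row_i, row_m):
                (fg if m else bg).append(v)
    # Evidence from the user's clicks on the current image.
    for r, c, label in clicks:
        (fg if label else bg).append(image[r][c])
    if not fg:
        return [[0] * len(row) for row in image]  # no foreground evidence yet
    threshold = min(fg) if not bg else (min(fg) + max(bg)) / 2
    return [[int(v >= threshold) for v in row] for row in image]


def first_error(pred: Mask, truth: Mask) -> Optional[Click]:
    """Simulated user: click the first mislabeled pixel with its correct label."""
    for r, (row_p, row_t) in enumerate(zip(pred, truth)):
        for c, (p, t) in enumerate(zip(row_p, row_t)):
            if p != t:
                return (r, c, t)
    return None


def segment_dataset(images, truths, predict: Predictor, max_clicks: int = 10):
    """Segment images one by one, growing the context set after each image."""
    context: list[tuple[Image, Mask]] = []
    clicks_per_image: list[int] = []
    for image, truth in zip(images, truths):
        clicks: list[Click] = []
        pred = predict(image, clicks, context)
        # Keep correcting until the prediction matches or the click budget runs out.
        while (err := first_error(pred, truth)) is not None and len(clicks) < max_clicks:
            clicks.append(err)
            pred = predict(image, clicks, context)
        context.append((image, pred))  # the finished segmentation joins the context set
        clicks_per_image.append(len(clicks))
    return context, clicks_per_image


if __name__ == "__main__":
    images = [[[0.1, 0.9], [0.2, 0.8]], [[0.15, 0.85], [0.05, 0.95]], [[0.3, 0.7], [0.1, 0.6]]]
    truths = [[[0, 1], [0, 1]]] * 3
    _, clicks = segment_dataset(images, truths, toy_predict)
    print(clicks)  # [2, 0, 0] on this toy data
```

On this toy data, the first image needs two clicks and the later ones need none, because the context set already supplies the evidence; that mirrors the behavior the article describes but says nothing about MultiverSeg’s actual numbers.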

The researchers designed the model’s architecture to handle a context set of any size, so the user does not need a specific number of images. This gives MultiverSeg the flexibility to be used in a range of applications.
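One standard way to let a model accept a context set of any size is to compute features for each context example and then average them, which yields a fixed-size summary that does not depend on how many examples there are or in what order they arrive. The article does not say MultiverSeg works this way; the sketch below only illustrates the general idea, and `example_features` and `pool_context` are hypothetical names with a deliberately simple feature (mean foreground and background intensity).

```python
from statistics import fmean
from typing import Optional

Image = list[list[float]]
Mask = list[list[int]]


def example_features(image: Image, mask: Mask) -> tuple[float, float]:
    """Hypothetical per-example summary: mean foreground and mean background intensity."""
    pairs = [(v, m) for row_i, row_m in zip(image, mask) for v, m in zip(row_i, row_m)]
    fg = [v for v, m in pairs if m]
    bg = [v for v, m in pairs if not m]
    return (fmean(fg) if fg else 0.0, fmean(bg) if bg else 0.0)


def pool_context(context: list[tuple[Image, Mask]]) -> Optional[tuple[float, ...]]:
    """Average per-example features so the result has a fixed size for any context-set size."""
    if not context:
        return None  # empty context: a model would rely on user interactions alone
    feats = [example_features(img, msk) for img, msk in context]
    # zip(*feats) groups the i-th feature of every example; averaging ignores their order.
    return tuple(fmean(column) for column in zip(*feats))


if __name__ == "__main__":
    ctx = [([[0.0, 1.0]], [[0, 1]]), ([[0.25, 0.75]], [[0, 1]])]
    print(pool_context(ctx[:1]))  # (1.0, 0.0) from one example
    print(pool_context(ctx))      # (0.875, 0.125) from two examples, same shape
```

Whether the context set holds one image or hundreds, the downstream model sees a summary of the same shape, which is what removes the need for a fixed number of examples.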

“At some point, for many tasks, you don’t need to provide any interactions. If you have enough examples in the context set, the model can accurately predict the segmentation on its own,” Wong said.

The researchers carefully engineered the model and trained it on a diverse collection of biomedical imaging datasets to ensure that it can incrementally improve its predictions based on user input.

Users do not need to retrain or customize the model for their data. To use MultiverSeg for a new task, they can simply upload a new medical image and start marking it.

When the researchers compared MultiverSeg with state-of-the-art tools for in-context and interactive image segmentation, it outperformed each baseline.

Fewer clicks, better results

Unlike these other tools, MultiverSeg requires less user input with each successive image. By the ninth new image, it needed only two clicks from the user to generate a segmentation more accurate than that of a model designed specifically for the task.

For some image types, such as X-rays, users may only need to segment one or two images manually before the model becomes accurate enough to make predictions on its own.

The tool’s interactivity also lets users correct the model’s predictions, iterating until they reach the desired level of accuracy. Compared with the researchers’ previous system, MultiverSeg reached 90% accuracy with roughly two-thirds the number of scribbles and three-quarters the number of clicks.
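The article reports “90% accuracy” without naming the metric. Segmentation quality is commonly measured with an overlap score such as the Dice coefficient, so the sketch below assumes Dice purely for illustration, together with a hypothetical `meets_target` stopping rule of the kind a user might apply when deciding whether to keep correcting a prediction.

```python
def dice(pred: list[list[int]], truth: list[list[int]]) -> float:
    """Dice overlap between two binary masks: 2 * |A ∩ B| / (|A| + |B|)."""
    overlap = sum(p & t for row_p, row_t in zip(pred, truth) for p, t in zip(row_p, row_t))
    total = sum(map(sum, pred)) + sum(map(sum, truth))
    return 1.0 if total == 0 else 2 * overlap / total  # two empty masks agree perfectly


def meets_target(pred: list[list[int]], truth: list[list[int]], target: float = 0.9) -> bool:
    """Hypothetical stopping rule: stop correcting once Dice reaches the target."""
    return dice(pred, truth) >= target


if __name__ == "__main__":
    truth = [[1] * 10]
    print(meets_target([[1] * 9 + [0]], truth))      # True: Dice = 18/19
    print(meets_target([[1] * 8 + [0, 0]], truth))   # False: Dice = 16/18
```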

“With MultiverSeg, users can always provide more interactions to refine the AI’s predictions. This still speeds up the process considerably, because it is usually much faster to correct an existing prediction than to start from scratch,” Wong said.

Going forward, the researchers hope to test the tool in real-world settings with clinical collaborators and improve it based on user feedback. They also hope to enable MultiverSeg to segment 3D biomedical images.

This work was supported, in part, by Quanta Computer, Inc. and the National Institutes of Health, with hardware support from the Massachusetts Life Sciences Center.

1005 Alcyon Dr Bellmawr NJ 08031
