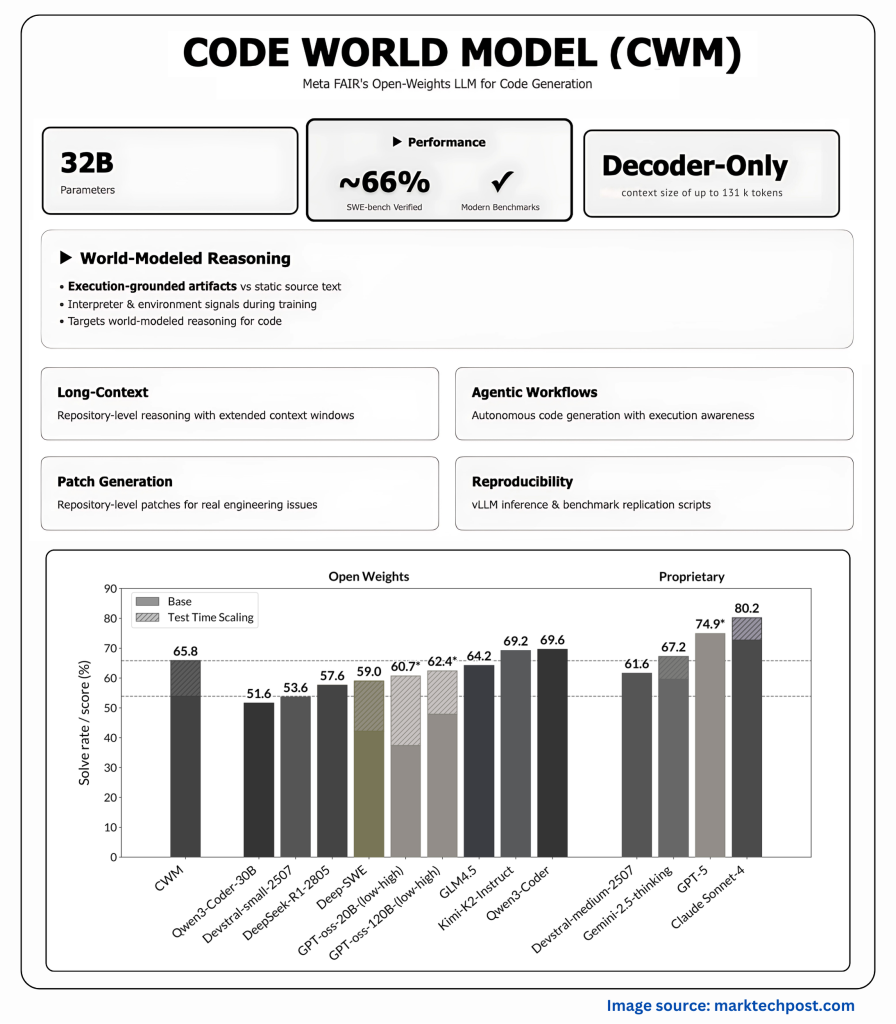

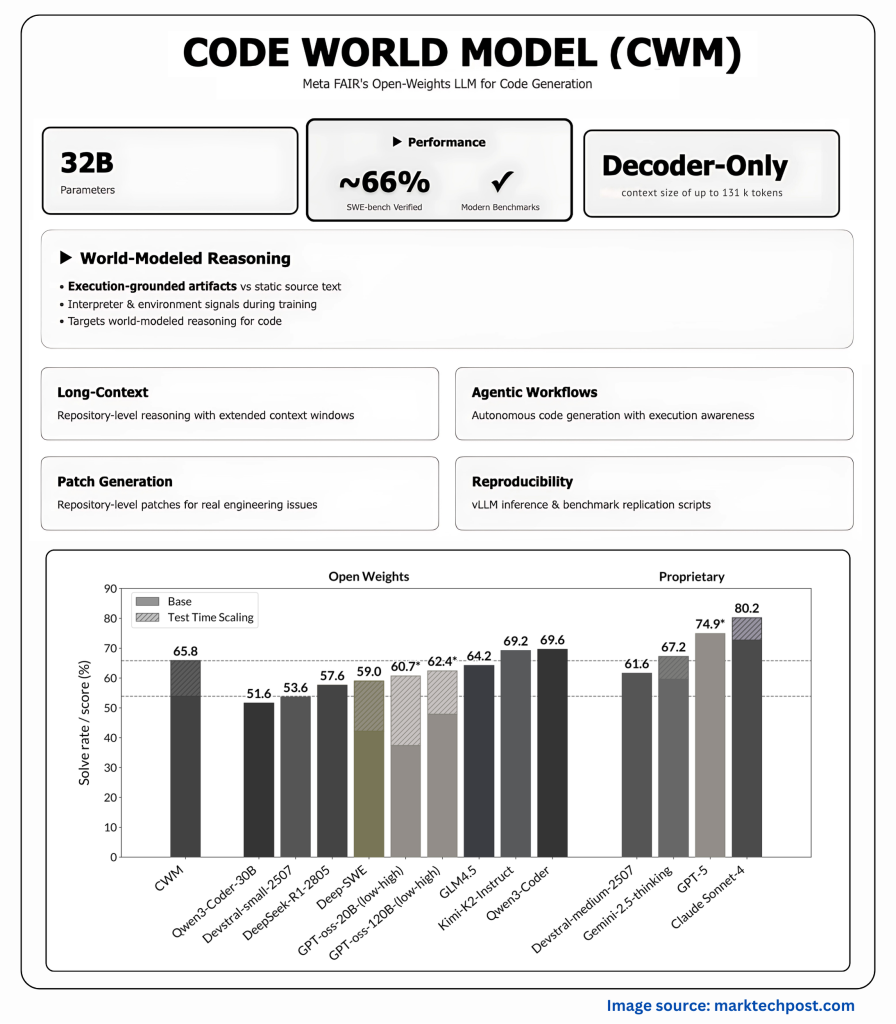

Meta FAIR released Code World Model (CWM), a 32-billion-parameter dense decoder-only LLM that injects world modeling into code generation by training on execution traces and long-horizon agent-environment interactions, not just static source text.

What's new: learning code by predicting execution?

CWM mid-trains on two large families of observation-action trajectories: (1) Python interpreter traces that record local variable states after each executed line, and (2) agentic interactions inside Dockerized repositories that capture edits, shell commands, and test feedback. This grounding is intended to teach semantics (how state evolves), not just syntax.
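To make the first kind of trajectory concrete, here is a minimal sketch of how per-line local-variable snapshots can be collected with Python's standard `sys.settrace` hook. The helper name `trace_locals` and the record layout are assumptions for illustration only; the article does not describe CWM's actual trace format or collection tooling.

```python
import sys


def trace_locals(func, *args, **kwargs):
    """Run func and record (line offset, locals snapshot) at each line event.

    Hypothetical helper: the record layout is an illustration, not CWM's format.
    """
    records = []
    target_code = func.__code__

    def tracer(frame, event, arg):
        # Only follow the frame that executes `func` itself.
        if frame.f_code is not target_code:
            return None
        if event == "line":
            # A "line" event fires just before the line runs, so the snapshot
            # shows the state left behind by the previously executed line.
            offset = frame.f_lineno - target_code.co_firstlineno
            records.append((offset, dict(frame.f_locals)))  # shallow copy
        elif event == "return":
            records.append(("return", dict(frame.f_locals)))
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        result = func(*args, **kwargs)
    finally:
        sys.settrace(previous)  # always restore the previous tracer
    return result, records


def running_sum(values):
    total = 0
    for v in values:
        total += v
    return total


result, records = trace_locals(running_sum, [1, 2])
for step, snapshot in records:
    print(step, snapshot)
```

Each printed row pairs a position in the function with the variable state at that point, which is the kind of state evolution a model would have to predict instead of observing.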

To scale collection, the research team built executable repository images from thousands of GitHub projects and foraged multi-step trajectories with a software-engineering agent ("ForagerAgent"). The release reports ~3M trajectories across ~10K images and 3.15K repositories, with mutate-fix and issue-fix variants.

Model and context window

CWM is a dense, decoder-only Transformer (no MoE) with 64 layers, GQA (48Q/8KV), SwiGLU, RMSNorm, and Scaled RoPE. Attention alternates local 8K and global 131K sliding-window blocks, enabling an effective context of 131K tokens; training uses document-causal masking.
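The interaction between a sliding window and document-based masking can be sketched with a small boolean mask. This is an illustrative toy in plain Python, not CWM's implementation: the function name `attention_mask`, the convention that the window counts the current token, and the reading of the masking as "causal within each packed document" are all assumptions.

```python
def attention_mask(doc_ids, window):
    """Return mask[i][j] == True where query position i may attend to key j.

    doc_ids: document id of each token in a packed sequence (assumed layout).
    window:  number of most recent positions visible, current token included
             (assumed convention).
    """
    n = len(doc_ids)
    mask = []
    for i in range(n):
        row = []
        for j in range(n):
            causal = j <= i                      # never look at future tokens
            in_window = i - j < window           # sliding-window limit
            same_doc = doc_ids[i] == doc_ids[j]  # no attention across documents
            row.append(causal and in_window and same_doc)
        mask.append(row)
    return mask


# Two packed documents of three tokens each.
doc_ids = [0, 0, 0, 1, 1, 1]
local = attention_mask(doc_ids, window=2)     # small "local" window
wide = attention_mask(doc_ids, window=6)      # window covering the whole sequence

for row in local:
    print("".join("x" if allowed else "." for allowed in row))
```

With the wide window, each token sees every earlier token of its own document; with the narrow one, it sees only the most recent positions. In both cases the second document never attends to the first.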

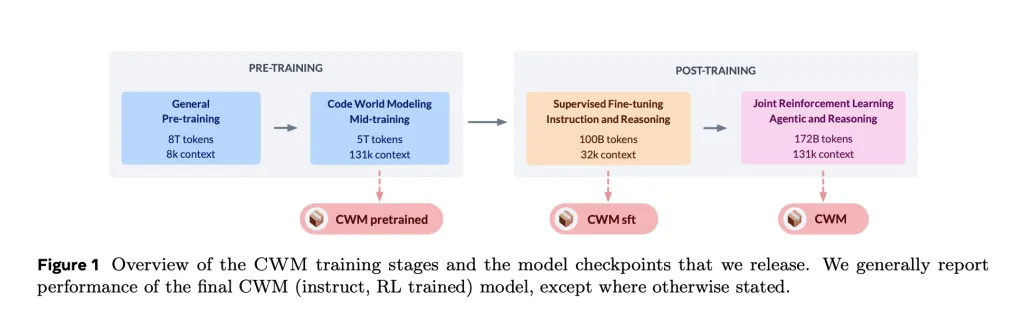

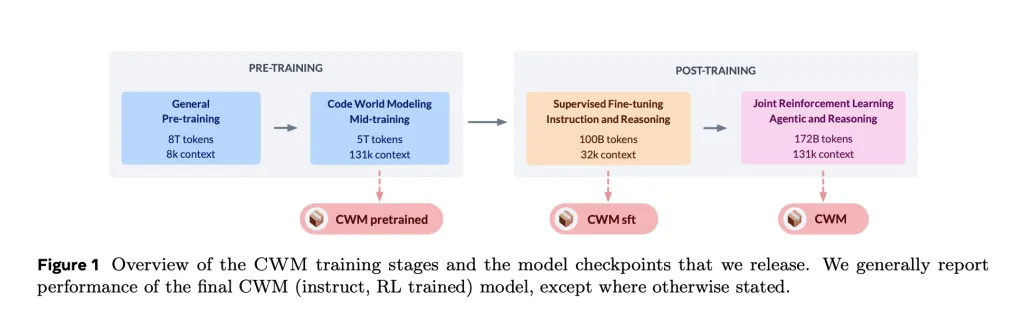

Training recipe (pre → mid → post)

- General pretraining: 8T tokens (code-heavy) at 8K context.

- Mid-training: +5T tokens at long context (131K), with Python execution traces, ForagerAgent data, PR-derived diffs, IR/compilers, Triton kernels, and Lean math.

- Post-training: 100B-token SFT for instruction following and reasoning, then multi-task RL (~172B tokens) across verifiable coding, math, and multi-turn SWE environments, using a GRPO-style algorithm and a minimal toolset (bash/edit/create/submit).

- Quantized inference fits on a single 80 GB H100.
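For readers unfamiliar with GRPO, its core idea is to score each sampled response relative to the other responses drawn for the same prompt. The sketch below shows only that group-relative normalization as it is commonly described; the function name and the `eps` constant are assumptions, and the article does not say which modifications CWM's RL stage applies on top.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize rewards within one group of samples for the same prompt.

    Each advantage is (reward - group mean) / (group std + eps).
    eps is an assumed small constant that avoids division by zero.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four sampled solutions to one task; reward 1.0 means the tests passed.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
print(advantages)

# If every sample gets the same reward, there is no learning signal.
print(group_relative_advantages([1.0, 1.0, 1.0]))
```

Verifiable rewards such as test results fit this scheme well because they can be computed automatically for every sample in the group.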

Benchmarks

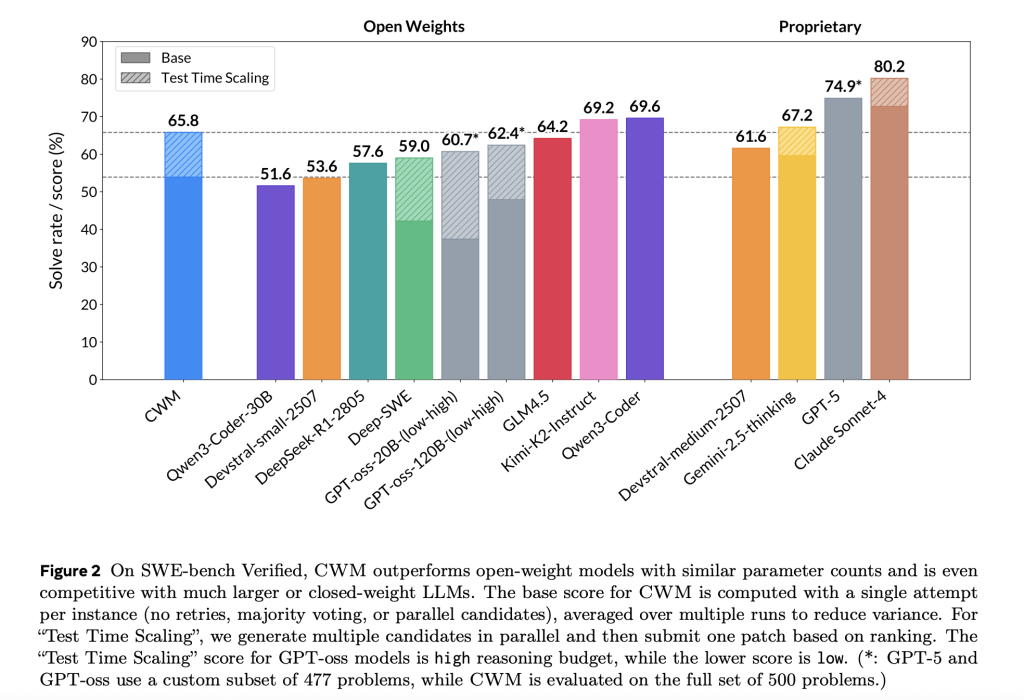

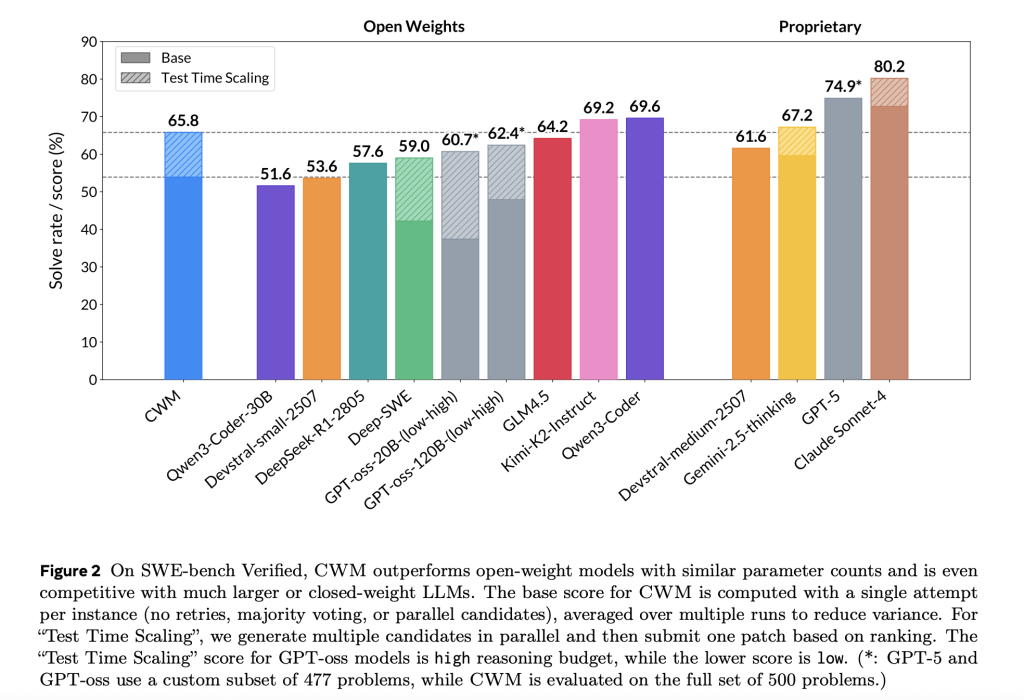

The research team cites the following pass@1 results and scores (test-time scaling is noted where applicable):

- SWE-bench Verified: 65.8% (with test-time scaling).

- LiveCodeBench-v5: 68.6%; LCB-v6: 63.5%.

- Math-500: 96.6%; AIME-24: 76.0%; AIME-25: 68.2%.

- CruxEval-Output: 94.3%.
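As a reminder of what a pass@1 figure measures, the snippet below implements the widely used unbiased pass@k estimator: with `n` samples per problem of which `c` pass, it computes the probability that at least one of `k` randomly chosen samples passes. The article does not state how many samples per problem were drawn for the numbers above, so the values in the example are made up.

```python
from math import comb


def pass_at_k(n, c, k):
    """Unbiased pass@k estimate for one problem.

    n: total samples generated, c: samples that passed, k: budget being scored.
    """
    if n - c < k:
        # Fewer than k failures exist, so any k samples include a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


# Made-up example: 10 samples, 3 of them correct.
print(pass_at_k(10, 3, 1))   # for k=1 this reduces to c / n
print(pass_at_k(10, 3, 5))
```

A benchmark score is then the mean of this quantity over all problems.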

The research team positions CWM as competitive with similarly sized open-weights baselines, and even with larger or closed models on SWE-bench Verified.

For context on the task design and metrics of SWE-bench Verified, see the official benchmark resources.

Why does world modeling matter for code?

The release emphasizes two operational capabilities:

- Execution-trace prediction: given a function and the start of a trace, CWM predicts the stack frames (locals) and the executed line at each step in a structured format, which makes it usable as a "neural debugger" for grounded reasoning without live execution.

- Agentic coding: multi-turn reasoning with tool use against real repositories, verified by hidden tests and patch-similarity rewards; the setup trains the model to localize faults and generate end-to-end patches (git diff) rather than snippets.
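The article does not say how patch similarity is measured. As a rough stand-in only, the sketch below scores a candidate diff against a reference diff with the standard library's `difflib.SequenceMatcher`, compared line by line. The function name `patch_similarity` and the choice of metric are assumptions, not the reward used to train CWM.

```python
import difflib


def patch_similarity(candidate_patch, reference_patch):
    """Return a similarity score in [0, 1] between two patches, line by line.

    Stand-in metric: SequenceMatcher.ratio() over the lists of patch lines.
    """
    cand = candidate_patch.splitlines()
    ref = reference_patch.splitlines()
    return difflib.SequenceMatcher(None, cand, ref).ratio()


reference = """--- a/calc.py
+++ b/calc.py
@@
-    return a - b
+    return a + b
"""

candidate = """--- a/calc.py
+++ b/calc.py
@@
-    return a - b
+    return b + a
"""

print(patch_similarity(reference, reference))  # identical patches score 1.0
print(patch_similarity(candidate, reference))  # partial overlap scores in between
```

A signal like this is denser than a pass/fail test result, which is one plausible reason to combine the two, though the exact weighting is not given in the article.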

Some details worth noting

- Tokenizer: Llama-3 family with reserved control tokens; reserved IDs are used to demarcate trace and reasoning segments during SFT.

- Attention layout: the 3:1 local:global interleave is repeated across the full depth; long-context training runs at large token batch sizes to stabilize gradients.

- Compute scaling: learning-rate and batch-size schedules are derived from internal scaling-law sweeps tailored to long-context overheads.
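The 3:1 local:global layout can be written out as a per-layer schedule. In this sketch the placement of the global block at the end of each group of four, and the exact window sizes of 8192 and 131072 tokens, are assumptions; the article gives only the ratio, the 64-layer depth, and the rounded 8K and 131K figures.

```python
def layer_layout(num_layers=64, local_per_global=3,
                 local_window=8192, global_window=131072):
    """Return a list of (kind, window) tuples, one per layer.

    Assumed pattern: `local_per_global` local layers followed by one global.
    """
    layout = []
    period = local_per_global + 1
    for i in range(num_layers):
        if (i + 1) % period == 0:
            layout.append(("global", global_window))
        else:
            layout.append(("local", local_window))
    return layout


layout = layer_layout()
print(layout[:4])
print(sum(1 for kind, _ in layout if kind == "local"), "local layers")
print(sum(1 for kind, _ in layout if kind == "global"), "global layers")
```

Under these assumptions, 48 of the 64 layers use the short window and 16 use the long one, so most of the attention cost stays bounded by the local window.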

Summary

CWM is a pragmatic step toward grounded code generation: Meta ties a 32B dense transformer to execution-trace learning and agentic, test-verified patching, releases intermediate and post-trained checkpoints, and gates usage under the FAIR Non-Commercial Research License. That makes it a useful platform for research on long-context, execution-aware coding rather than a production deployment.

Check out the Paper, GitHub page, and model on Hugging Face.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform that stands out for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.


1005 Alcyon Dr Bellmawr NJ 08031