Artificial intelligence (AI) has made considerable progress in recent years, but challenges persist in achieving efficient, cost-effective, high-performance models. Developing large language models (LLMs) often requires substantial computing resources and financial investment, which can be prohibitive for many organizations. Furthermore, ensuring that these models have strong reasoning capabilities and can be deployed effectively on consumer-grade hardware remains a hurdle.

DeepSeek AI has released DeepSeek-V3-0324, a major upgrade to its V3 language model. The new model not only improves performance but also runs at an impressive 20 tokens per second on a Mac Studio, a consumer-grade device. This advancement intensifies competition with industry leaders such as OpenAI and demonstrates DeepSeek’s commitment to making high-quality AI models more accessible and efficient.

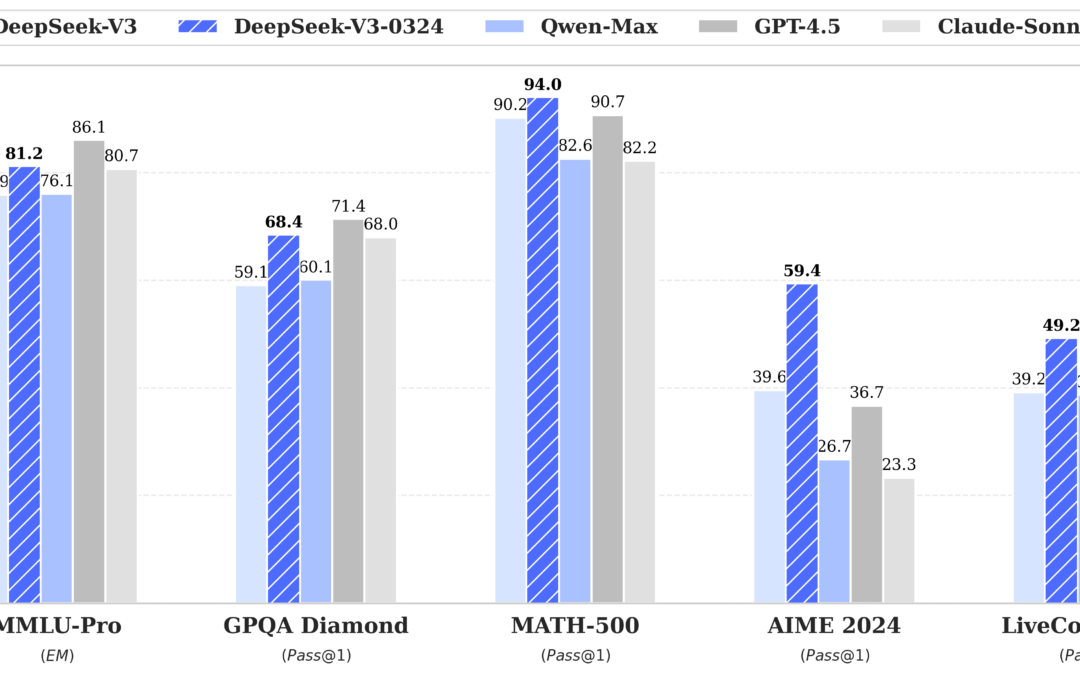

DeepSeek-V3-0324 introduces several technical improvements over its predecessor. Most notably, it shows significant gains in reasoning ability, with benchmark scores rising substantially:

- MMLU-Pro: 75.9→81.2 (+5.3)

- GPQA: 59.1→68.4 (+9.3)

- AIME: 39.6→59.4 (+19.8)

- LiveCodeBench: 39.2→49.2 (+10.0)
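The gains in the list above can be checked with a few lines of arithmetic. The following is a minimal sketch, not part of the DeepSeek release: the `scores` table and the `score_gains` helper are illustrative names, and the relative-gain figure is an extra computed here from the same reported scores.

```python
# Before/after benchmark scores as reported in the list above.
scores = {
    "MMLU-Pro": (75.9, 81.2),
    "GPQA": (59.1, 68.4),
    "AIME": (39.6, 59.4),
    "LiveCodeBench": (39.2, 49.2),
}


def score_gains(table):
    """Return {benchmark: (absolute gain in points, relative gain in %)}."""
    gains = {}
    for name, (before, after) in table.items():
        # Round to one decimal place to hide floating-point noise.
        absolute = round(after - before, 1)
        relative = round(100 * (after - before) / before, 1)
        gains[name] = (absolute, relative)
    return gains


gains = score_gains(scores)
for name, (absolute, relative) in gains.items():
    print(f"{name}: +{absolute} points ({relative}% relative)")
```

Seen this way, the AIME jump stands out: the absolute gain is the largest of the four, and it is also large relative to the earlier score.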

These gains suggest a stronger ability to understand and handle complex tasks. The model also shows improved front-end web development skills, generating more executable code and more aesthetically pleasing web pages and game interfaces. Its Chinese writing has improved as well, aligning with the R1 writing style and raising the quality of medium-to-long-form content. Finally, function calling accuracy has been improved, fixing problems present in previous versions.

DeepSeek-V3-0324 is released under the MIT license, underscoring DeepSeek AI’s commitment to open-source collaboration and allowing developers around the world to use and build on the technology without restrictive licensing constraints. The model runs effectively on devices such as the Mac Studio, where it reaches 20 tokens per second, reflecting its practical applicability and efficiency. This level of performance not only makes advanced AI more accessible but also reduces reliance on expensive specialized hardware, lowering the barrier to entry for many users and organizations.
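To put the 20 tokens per second figure in perspective, here is a rough back-of-the-envelope sketch. It assumes a constant generation rate and ignores prompt-processing time. The response lengths are hypothetical examples, and `generation_time_seconds` is an illustrative helper, not part of any DeepSeek tooling.

```python
def generation_time_seconds(num_tokens, tokens_per_second=20.0):
    """Estimate seconds needed to generate num_tokens at a steady rate.

    Assumes a constant decode speed and ignores prompt processing.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Hypothetical response lengths, chosen only for illustration.
for length in (100, 500, 2000):
    print(f"{length} tokens: ~{generation_time_seconds(length):.0f} s")
```

Under these assumptions, a 500-token answer would take about 25 seconds, which is workable for interactive use on a desktop machine.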

In short, the release of DeepSeek-V3-0324 marks an important milestone in the AI landscape. By addressing key challenges in performance, cost, and accessibility, DeepSeek positions itself as a strong competitor to established players such as OpenAI. The model’s technical advances and open-source availability are expected to further democratize AI technology, fostering innovation and wider adoption across sectors.

Check out the model on Hugging Face. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform that offers in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has over 2 million views per month, demonstrating its popularity among its audience.

1005 Alcyon Dr Bellmawr NJ 08031
