Understand the limitations of language model transparency

As large language models (LLMs) become the core of more and more applications, from enterprise decision support to education and scientific research, it becomes more urgent to understand their internal decisions. The core challenge remains: How do we determine where the model’s response comes from? Most LLMs are trained on large-scale datasets made up of trillions of tokens, but there are no practical tools to map model output back to the data that shapes them. This opacity complicates efforts to assess trustworthiness, track the origin of facts, and study potential memories or biases.

Olmotrace – A tool for real-time output tracking

Allen AI Institute (AI2) recently introduced OmotrasThe system is designed to trace the segments of responses generated by LLM in real time. The system is built on top of the AI2 open source Olmo model and provides an interface to identify verbatim overlap between generated text and documents used during model training. Unlike retrieval-based generation (RAG) methods that inject external contexts during inference, Olmotrace is designed for post-interpretation, which identifies the link between model behavior and prior exposure during training.

Olmotrace has been integrated into the AI2 playground, where users can check specific spans in the LLM output, view matching training documents, and check these documents in the extended context. The system supports Olmo models, including Olmo-2-32B-Instruct, and utilizes its complete training data – 46 trillion tokens in 3.2 billion documents.

Technical architecture and design considerations

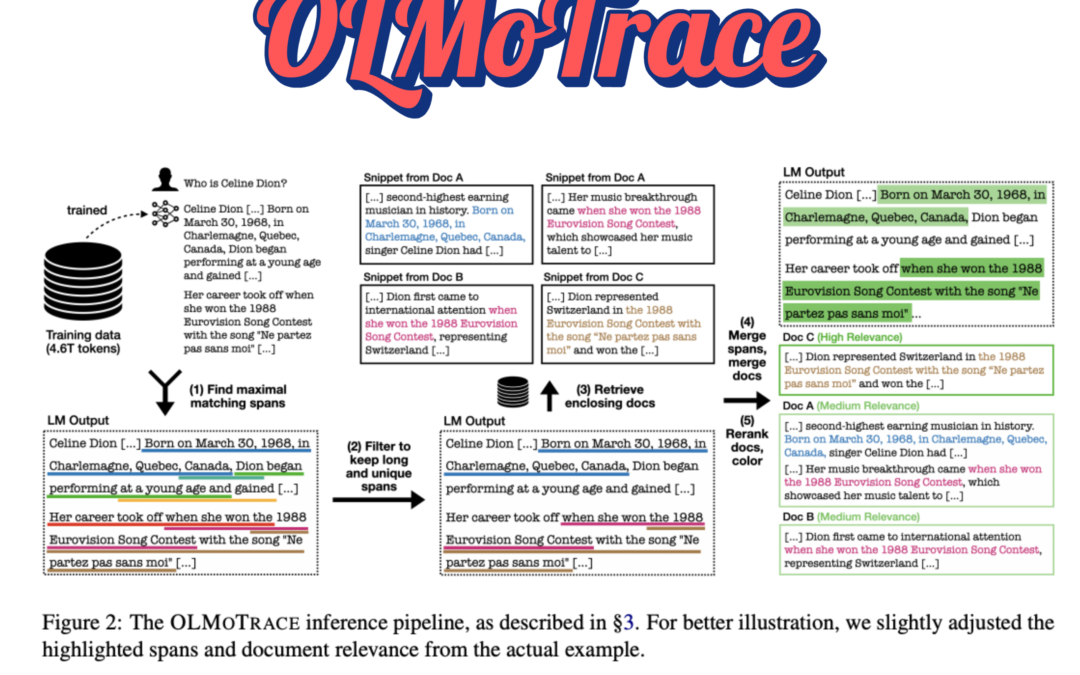

The core of Olmotrace is Infini-gramis an index and search engine built for the extreme text corpus. The system uses a structure based on a suffix array to effectively search for the exact span in the model output in the training data. The core reasoning pipeline consists of five stages:

- Span Identification: Extract all maximum spans from the output of the model, which matches the verbatim sequence in the training data. The algorithm avoids spanning incomplete, overly general or nested spans.

- Span filtering: Span of spans based on “Span Umigram Probability”, which prioritizes longer and fewer phrases as informative agents.

- File Retrieval: For each span, the system retrieves up to 10 related documents containing phrases, balance accuracy and runtime.

- merge: Merge overlapping spans and duplicates to reduce redundancy in the user interface.

- Related rankings: Apply the BM25 score to rank the retrieved documents based on their similarity to the original prompt and response.

The design ensures that the tracking results are not only accurate, but also surfaced during the average latency of 450 models outputs. All processing is performed on a CPU-based node and an SSD is used to accommodate large index files with low latency access.

Evaluation, Insights and Use Cases

AI2 uses 98 LLM generated conversations for internal usage dialogues for benchmarking. Document relevance was scored by human annotators and model-based “LLM-AS-AA-Gudge” evaluators (GPT-4O). The average correlation score for the highest retrieved documents was 1.82 (in the ratio of 0-3), and the average of the first 5 documents was 1.50, indicating a reasonable alignment between the model output and the retrieved training environment.

Three illustrative use cases demonstrate the practicality of the system:

- Fact proof: Users can determine whether it is possible to remember the fact statement from the training data by checking their source documentation.

- Creative expression analysis: Even seemingly novel or stylized languages (e.g., Tolkien-like wording) can sometimes be traced back to fan novels or literary samples in the training corpus.

- Mathematical reasoning:olmotrace can surface-comply with exact matches of examples of symbolic calculation steps or structured problems, thus clarifying how LLMS learns mathematical tasks.

These use cases highlight the actual value of tracking model output to train data to understand memory, data source, and generalized behavior.

Impact on open models and model review

Olmotrace emphasizes the importance of transparency in LLM development, especially for open source models. While the tool only exhibits vocabulary matching rather than causality, it provides a concrete mechanism to study how language models reuse training material. This is especially important in the context of compliance, copyright audit or quality assurance.

The system’s open source foundation is built under the Apache 2.0 license and further exploration is also invited. Researchers can extend it to approximate matching or impact-based technologies, while developers can integrate it into a wider pipeline of LLM evaluations.

In landscapes where model behavior is often opaque, Olmotrace sets precedent for checkable, data-grounded LLMS – improving standards for transparency in model development and deployment

Check Paper and playground. All credits for this study are to the researchers on the project. Also, please feel free to follow us twitter And don’t forget to join us 85k+ ml reddit. notes:

Asif Razzaq is CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, ASIF is committed to harnessing the potential of artificial intelligence to achieve social benefits. His recent effort is to launch Marktechpost, an artificial intelligence media platform that has an in-depth coverage of machine learning and deep learning news that can sound both technically, both through technical voices and be understood by a wide audience. The platform has over 2 million views per month, demonstrating its popularity among its audience.

1005 Alcyon Dr Bellmawr NJ 08031

1005 Alcyon Dr Bellmawr NJ 08031