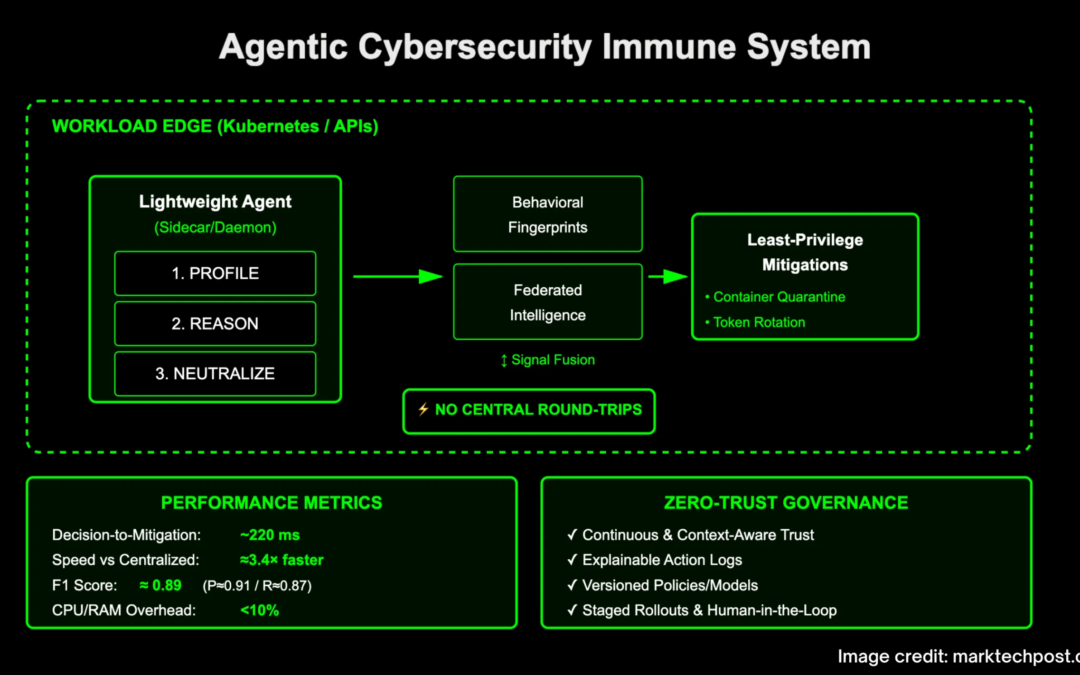

Can your AI security stack profile, reason about, and neutralize a live security threat in ~220 ms, with no central round trip? Researchers from Google and the University of Arkansas at Little Rock outline an agentic cybersecurity “immune system” built from lightweight, autonomous sidecar AI agents co-located with workloads (Kubernetes pods, API gateways, edge services). Instead of exporting raw telemetry to a SIEM and waiting for batched classifiers, each agent learns local behavioral baselines, evaluates anomalies using federated intelligence, and applies least-privilege mitigations directly at the point of execution. In a controlled cloud-native simulation, this edge-first loop cut decision-to-mitigation time to ~220 ms (≈3.4× faster than centralized pipelines), reached F1 ≈ 0.89, and held host overhead below 10% CPU/RAM. That is evidence that moving detection and enforcement into the workload plane can deliver both speed and fidelity without a material resource penalty.

What does “Profile → Reason → Neutralize” mean at the primitive level?

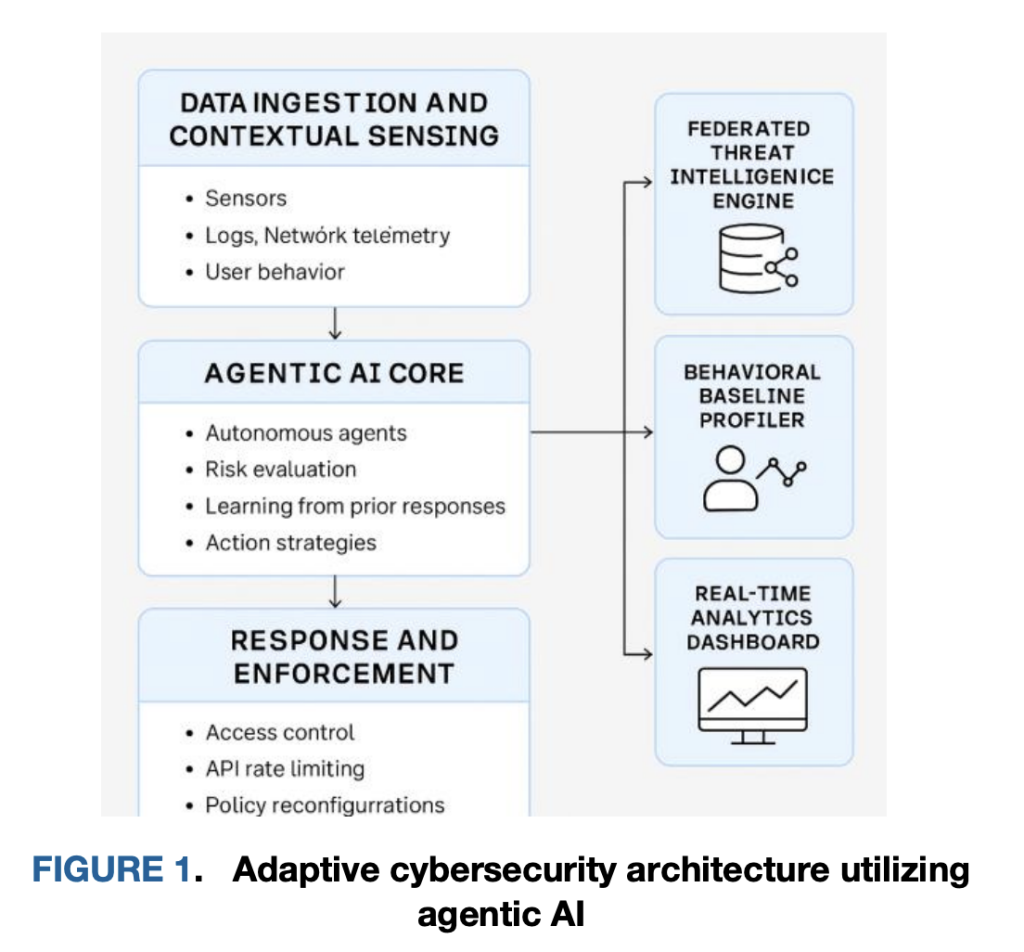

Profile. Agents are deployed as sidecars/daemonsets alongside microservices and API gateways. They build behavioral fingerprints from execution traces, syscall paths, API call sequences, and inter-service flows. This local baseline adapts to short-lived pods, rolling deployments, and autoscaling, which are conditions that routinely break perimeter controls and static allowlists. Profiling is not only a count threshold; it retains structural features (order, timing, peer set) and can therefore detect zero-day-like deviations. The research team frames this as continuous, context-aware baselining across the ingestion and sensing layers, so that “normal” is learned per workload and per identity boundary.
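To make the idea of a sequence-aware baseline concrete, here is a minimal sketch. It is not the paper's profiler: the `SequenceBaseline` class, the bigram representation, and the call names are assumptions chosen only to show how ordering (rather than raw counts) can be retained and scored.

```python
from collections import Counter
from typing import Sequence


class SequenceBaseline:
    """Hypothetical per-workload baseline over adjacent API-call pairs."""

    def __init__(self) -> None:
        self.bigrams: Counter = Counter()

    def learn(self, calls: Sequence[str]) -> None:
        # Count adjacent call pairs so that ordering, not just volume, is kept.
        self.bigrams.update(zip(calls, calls[1:]))

    def deviation(self, calls: Sequence[str]) -> float:
        # Fraction of adjacent pairs in this trace never seen during learning.
        pairs = list(zip(calls, calls[1:]))
        if not pairs:
            return 0.0
        unseen = sum(1 for pair in pairs if pair not in self.bigrams)
        return unseen / len(pairs)


baseline = SequenceBaseline()
baseline.learn(["auth", "list_orders", "get_order", "get_order"])
baseline.learn(["auth", "get_order", "update_order"])

normal = baseline.deviation(["auth", "list_orders", "get_order"])
odd = baseline.deviation(["auth", "export_all", "delete_order"])
print(normal, odd)
```

A trace made only of known transitions scores 0.0, while one made only of unseen transitions scores 1.0. A real agent would also need timing and peer-set features, plus decay so the baseline can follow rolling deployments.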

Reason. When an anomaly appears (for example, an unusual burst of uploads from a low-trust principal, or an API call graph that has never been seen before), the local agent blends its anomaly score with federated intelligence, meaning shared indicators and model deltas learned by peers, to produce a risk estimate. Reasoning is designed to be edge-first: the agent decides without a round trip to a central adjudicator, and the trust decision is continuous rather than a static role gate. This aligns with zero trust, where identity and context are evaluated on every request rather than only at session start, and it reduces the central bottlenecks that add latency under load.
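The blending step can be sketched as follows. The fusion rule, the `peer_weight` parameter, and the way identity trust discounts the result are all illustrative assumptions; the article does not specify the actual scoring function.

```python
from statistics import fmean
from typing import Sequence


def fuse_risk(
    local_score: float,
    peer_scores: Sequence[float],
    identity_trust: float,
    peer_weight: float = 0.3,
) -> float:
    """Blend a local anomaly score with peer-shared scores (all in [0, 1]).

    Hypothetical rule: a weighted average of local and peer evidence,
    discounted by up to half for highly trusted principals.
    """
    # With no peer evidence, fall back to the local score alone.
    peer = fmean(peer_scores) if peer_scores else local_score
    blended = (1.0 - peer_weight) * local_score + peer_weight * peer
    risk = blended * (1.0 - 0.5 * identity_trust)
    return min(1.0, max(0.0, risk))


low_trust = fuse_risk(0.8, [0.9, 0.7], identity_trust=0.1)
high_trust = fuse_risk(0.8, [0.9, 0.7], identity_trust=0.9)
print(round(low_trust, 3), round(high_trust, 3))
```

The same evidence yields a higher risk for a low-trust principal than for a high-trust one, which is the continuous, per-request behavior the paragraph describes.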

Neutralize. If the risk exceeds a context-sensitive threshold, the agent immediately executes a local control mapped to least-privilege actions: quarantine the container (pause/isolate), rotate credentials, apply rate limits, revoke tokens, or tighten a per-route policy. Enforcement is written back to the policy store and logged with a human-readable rationale for audit. The fast path is the core differentiator: in the reported evaluation, the autonomous path triggers in ~220 ms versus ~540–750 ms for centralized ML or firewall-update pipelines, which translates into a ~70% latency reduction and fewer opportunities for lateral movement during the decision window.
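A minimal sketch of mapping a risk estimate to an action, with a rationale string for the audit trail, might look like this. The threshold values, the action names, and the `sensitivity` adjustment are hypothetical; they only illustrate a graduated ladder in which stronger controls require higher risk.

```python
from dataclasses import dataclass

# Hypothetical escalation ladder: (minimum risk, action), mildest first.
ESCALATION = [
    (0.50, "apply_rate_limit"),
    (0.70, "revoke_token"),
    (0.90, "quarantine_container"),
]


@dataclass(frozen=True)
class Decision:
    workload: str
    risk: float
    action: str
    rationale: str


def neutralize(workload: str, risk: float, sensitivity: float = 0.0) -> Decision:
    """Pick the strongest action whose (context-adjusted) threshold is met.

    `sensitivity` lowers every threshold for sensitive contexts; it is an
    assumed stand-in for the context-sensitive threshold in the text.
    """
    action, met = "allow", None
    for threshold, candidate in ESCALATION:
        adjusted = threshold - sensitivity
        if risk >= adjusted:
            action, met = candidate, adjusted
    if met is None:
        rationale = f"risk={risk:.2f} below all thresholds"
    else:
        rationale = f"risk={risk:.2f} >= threshold={met:.2f}"
    return Decision(workload, risk, action, rationale)


print(neutralize("pod-a", 0.75))
```

Keeping the ladder as data rather than branching logic makes it simple to version and review alongside other policy artifacts.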

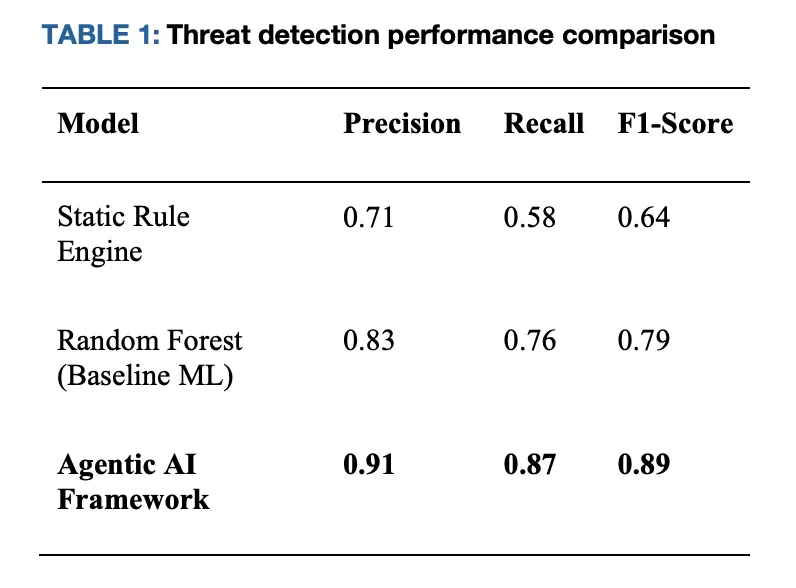

Where do these numbers come from and what is the baseline?

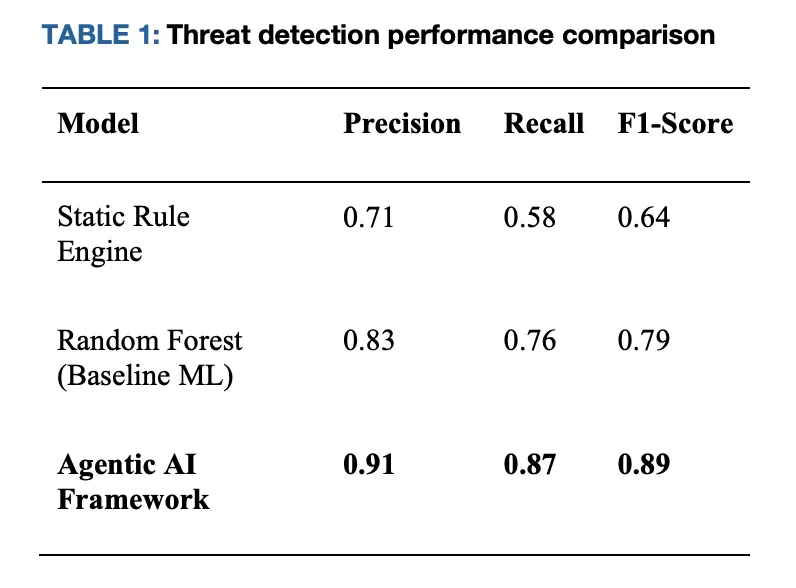

The research team evaluated the architecture in a Kubernetes-native simulation spanning API abuse and lateral-movement scenarios. Against two typical baselines, (i) a static rule pipeline and (ii) a batch-trained classifier, the agentic approach reports precision 0.91 / recall 0.87 / F1 0.89, while the baselines land near F1 0.64 (rules) and F1 0.79 (baseline ML). Decision latency falls to ~220 ms for local enforcement, compared with ~540–750 ms for centralized paths that must coordinate with a controller or an external firewall. Resource overhead on host services remains below 10% in CPU/RAM.
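As a quick consistency check on the reported figures, the short script below recomputes F1 from the reported 0.91 / 0.87 style pairs (treated here as precision and recall) and derives the speedup from the latency numbers. It uses only values quoted in this article; the variable names are illustrative.

```python
def f1(precision: float, recall: float) -> float:
    # F1 is the harmonic mean of precision and recall.
    return 2 * precision * recall / (precision + recall)


reported = {
    "static rules": (0.71, 0.58),
    "baseline ML": (0.83, 0.76),
    "agentic": (0.91, 0.87),
}
f1_scores = {name: round(f1(p, r), 2) for name, (p, r) in reported.items()}

agentic_ms, ml_ms, rules_ms = 220, 540, 750
speedup_vs_rules = rules_ms / agentic_ms        # about 3.4x
speedup_vs_ml = ml_ms / agentic_ms              # about 2.5x
reduction_vs_rules = 1 - agentic_ms / rules_ms  # about 71%
reduction_vs_ml = 1 - agentic_ms / ml_ms        # about 59%

print(f1_scores)
print(round(speedup_vs_rules, 2), round(speedup_vs_ml, 2))
print(round(reduction_vs_rules, 2), round(reduction_vs_ml, 2))
```

The recomputed F1 values match the reported 0.64, 0.79, and 0.89. Note that the headline ≈3.4× speedup and ~70% reduction correspond to the slowest (~750 ms) baseline; against the ~540 ms ML pipeline the gain is closer to 2.5× and about 59%.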

Why does this matter for zero-trust engineering, and not just for research graphs?

Zero trust (ZT) calls for continuous verification at request time using identity, device, and context. In practice, many ZT deployments still defer to central policy evaluators, so they inherit control-plane latency and queuing pathologies under load. By moving risk inference and enforcement to the autonomous edge, the architecture turns ZT posture from periodic policy pulls into a set of self-contained, continuously learning controllers that execute least-privilege changes locally and then synchronize state. The design simultaneously reduces mean time to contain (MTTC) and keeps decisions near the blast radius, which helps when inter-pod hops are measured in milliseconds. The research team also formalizes federated sharing, which distributes indicators and model deltas without heavy raw-data movement; this is relevant for privacy boundaries and multi-tenant SaaS.
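The idea of sharing model deltas instead of raw telemetry can be illustrated with plain federated averaging. This is a stand-in technique chosen for illustration, since the article does not describe the paper's aggregation rule; the function names and the two-weight "model" are assumptions.

```python
from typing import List, Sequence


def local_delta(global_w: Sequence[float], local_w: Sequence[float]) -> List[float]:
    # Each agent shares only how its weights moved, never the events behind them.
    return [l - g for g, l in zip(global_w, local_w)]


def apply_federated_update(
    global_w: Sequence[float], deltas: Sequence[Sequence[float]]
) -> List[float]:
    # Unweighted federated averaging: add the mean delta to the shared model.
    n = len(deltas)
    mean_delta = [sum(column) / n for column in zip(*deltas)]
    return [g + d for g, d in zip(global_w, mean_delta)]


global_weights = [0.0, 1.0]
agent_a = [0.2, 1.0]   # weights after local training on agent A's traffic
agent_b = [0.4, 0.8]   # weights after local training on agent B's traffic

deltas = [local_delta(global_weights, agent_a), local_delta(global_weights, agent_b)]
updated = apply_federated_update(global_weights, deltas)
print([round(w, 3) for w in updated])
```

Only the small delta vectors cross the network, which is the property that matters for privacy boundaries and multi-tenant deployments.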

How does it integrate with existing stacks (Kubernetes, APIs, and identity)?

Operationally, the agents are co-located with workloads (as a sidecar or a node daemon). On Kubernetes, they can hook CNI-level telemetry for flow features, container runtime events for process-level signals, and Envoy/NGINX spans at API gateways for request graphs. For identity, they consume claims from your IdP and compute continuous trust scores that factor in recent behavior and environment (for example, geo-risk and device posture). Mitigations are expressed as basic primitives (micro-policy updates, token revocations, per-route quotas), so they are straightforward to roll back or tighten. The architecture's control loop (sense → reason → act → learn) is strictly feedback-driven and supports both human-in-the-loop operation (policy windows, approval gates for high-blast-radius changes) and autonomy for low-impact actions.
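One way to picture a continuous trust score is an exponentially weighted update applied on every request. The formula, the `alpha` smoothing factor, and the device penalty below are assumptions for illustration only; the article does not define how the score is computed.

```python
def update_trust(
    trust: float,
    behavior_score: float,
    geo_risk: float,
    device_compliant: bool,
    alpha: float = 0.3,
) -> float:
    """Hypothetical per-request trust update; all scores are in [0, 1]."""
    # A non-compliant device halves the evidence in favor of the principal.
    device_factor = 1.0 if device_compliant else 0.5
    observed = behavior_score * (1.0 - geo_risk) * device_factor
    # Exponential moving average: recent requests matter most, history decays.
    return (1.0 - alpha) * trust + alpha * observed


trust = 0.9
history = []
requests = [(0.95, 0.1, True), (0.4, 0.6, True), (0.2, 0.8, False)]
for behavior, geo, compliant in requests:
    trust = update_trust(trust, behavior, geo, compliant)
    history.append(trust)

print([round(t, 3) for t in history])
```

Because the score is recomputed per request, a principal that authenticated cleanly can still lose trust mid-session as behavior and environment degrade.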

What are the governance and safety guardrails?

In regulated environments, speed without auditability is a non-starter. The research team emphasizes explainable decision logs that capture which signals and thresholds led to an action, together with signed and versioned policy/model artifacts. It also discusses privacy-preserving modes that keep sensitive data local while sharing model updates; differentially private updates are mentioned as an option in stricter regimes. For safety, the system supports override/rollback and staged rollouts (for example, canarying new mitigation templates in non-critical namespaces). This is consistent with broader security work on threats and guardrails for agentic systems; if your organization is adopting multi-agent pipelines, cross-check against current threat models for agent autonomy and tool use.
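A tamper-evident decision log entry can be sketched with the Python standard library. HMAC-SHA256 is used here as a simple symmetric stand-in; the article does not say which signing scheme the system uses, and a production deployment would more likely rely on asymmetric signatures and managed keys. The field names and the demo key are hypothetical.

```python
import hashlib
import hmac
import json


def _canonical(entry: dict) -> bytes:
    # Stable serialization so the same entry always produces the same bytes.
    return json.dumps(entry, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_entry(entry: dict, key: bytes) -> dict:
    signature = hmac.new(key, _canonical(entry), hashlib.sha256).hexdigest()
    return {"entry": entry, "signature": signature}


def verify_entry(record: dict, key: bytes) -> bool:
    expected = hmac.new(key, _canonical(record["entry"]), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, record["signature"])


key = b"demo-key-do-not-use"  # placeholder key for the example only
record = sign_entry(
    {
        "workload": "pod-a",
        "action": "revoke_token",
        "signals": {"sequence_deviation": 0.8},
        "threshold": 0.7,
        "policy_version": "v12",
    },
    key,
)
print(verify_entry(record, key))

tampered = {"entry": {**record["entry"], "action": "allow"}, "signature": record["signature"]}
print(verify_entry(tampered, key))
```

Recording the signals, the threshold, and the policy version in the signed payload is what lets an auditor later reconstruct why an action fired.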

How do reported results translate into production posture?

The evaluation is a 72-hour cloud-native simulation with injected behaviors: API abuse patterns, lateral movement, and zero-day-like deviations. Real systems will add messier signals (for example, noisy sidecars, multi-cluster networking, mixed CNI plugins) that affect both detection and enforcement timing. That said, the fast-path structure (local decision plus local action) is topology-agnostic and should preserve order-of-magnitude latency gains as long as mitigations are mapped to primitives available in your mesh/runtime. For production, start with observe-only agents to build baselines, then turn on mitigations for low-risk actions (quota clamps, token revocation), and then gate high-blast-radius controls (network slicing, container quarantine) behind policy windows until confidence/coverage metrics are green.
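The staged rollout described above can be expressed as a small gating function. The stage names, the action sets, and the `metrics_green` / `approved` flags are assumptions used to illustrate the ordering, not an interface from the paper.

```python
from enum import Enum


class Stage(Enum):
    OBSERVE_ONLY = "observe_only"  # build baselines, never act
    ENFORCE = "enforce"            # mitigations switched on


LOW_RISK = {"quota_clamp", "token_revoke"}
HIGH_BLAST_RADIUS = {"network_slice", "container_quarantine"}


def permitted(
    action: str,
    stage: Stage,
    metrics_green: bool = False,
    approved: bool = False,
) -> bool:
    """Return True if the agent may execute `action` right now."""
    if stage is Stage.OBSERVE_ONLY:
        return False
    if action in LOW_RISK:
        return True
    if action in HIGH_BLAST_RADIUS:
        # Gated behind human approval until confidence/coverage metrics are green.
        return metrics_green or approved
    # Unknown actions are denied by default.
    return False


print(permitted("token_revoke", Stage.ENFORCE))
print(permitted("container_quarantine", Stage.ENFORCE))
print(permitted("container_quarantine", Stage.ENFORCE, approved=True))
```

Denying unknown actions by default keeps a newly introduced mitigation template from executing before it has been explicitly classified.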

How does this fit into the broader agentic-security landscape?

There is growing research on securing agent systems and on using agent workflows for security tasks. The work discussed here is about defense through agent autonomy close to the workload. In parallel, other work tackles threat modeling for agentic AI, secure A2A protocol usage, and agentic vulnerability testing. If you adopt this architecture, pair it with a current agent-security threat model and a test harness that exercises tool-use boundaries and the memory safety of agents.

Comparison results (Kubernetes simulation)

| Metric | Static rule pipeline | Baseline ML (batch classifier) | Agentic framework (edge autonomy) |
|---|---|---|---|
| Precision | 0.71 | 0.83 | 0.91 |
| Recall | 0.58 | 0.76 | 0.87 |
| F1 | 0.64 | 0.79 | 0.89 |
| Decision-to-mitigation latency | ~750 ms | ~540 ms | ~220 ms |
| Host overhead (CPU/RAM) | Moderate | Moderate | <10% |

Key Points

- An edge-first “cybersecurity immune system.” Lightweight sidecar/daemon AI agents co-located with workloads (Kubernetes pods, API gateways) learn behavioral fingerprints, decide locally, and enforce least-privilege mitigations without SIEM round trips.

- Measured performance. The reported decision-to-mitigation time is ~220 ms, about 3.4× faster than centralized pipelines (≈540–750 ms), with F1 ≈ 0.89 (P ≈ 0.91, R ≈ 0.87) in a Kubernetes simulation.

- Low operational cost. Host overhead remains <10% CPU/RAM, making the approach practical for microservices and edge nodes.

- Profile → Reason → Neutralize loop. Agents continuously baseline normal activity (profile), fuse local signals with federated intelligence to score risk (reason), and apply immediate, reversible controls such as container quarantine, token rotation, and rate limits (neutralize).

- Zero-trust alignment. Decisions are continuous and context-aware (identity, device, geography, workload), replacing static role gates and reducing dwell time and lateral-movement risk.

- Governance and safety. Actions are logged with explainable rationales; policies/models are signed and versioned; high-blast-radius mitigations can be gated behind human-in-the-loop approval and staged rollouts.

Summary

Treat defense as a set of profiling, reasoning, and neutralizing agents that act where the threat lives. The reported profile (~220 ms actions, ≈3.4× faster than centralized baselines, F1 ≈ 0.89, <10% overhead) is consistent with what you would expect when you eliminate central hops and let autonomous agents handle least-privilege mitigations locally. It aligns with zero trust's continuous verification and gives teams a practical path to self-stabilizing operations: learn what is normal, flag deviations with federated context, and contain early, before lateral movement outpaces your control plane.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform known for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among its audience.


1005 Alcyon Dr Bellmawr NJ 08031
