When you’re trying to communicate or understand ideas, words don’t always do the trick. Sometimes the more efficient approach is to sketch the concept: diagramming a circuit, for example, can help make sense of how a system works.

But what if artificial intelligence could help us explore these visualizations? While these systems are typically proficient at creating realistic paintings and cartoonish drawings, many models fail to capture the essence of sketching: its stroke-by-stroke, iterative process, which helps humans brainstorm and edit how they want to represent their ideas.

A new drawing system from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and Stanford University can sketch more like we do. Their method, called “SketchAgent,” uses a multimodal language model (an AI system trained on text and images, such as Anthropic’s Claude 3.5 Sonnet) to turn natural language prompts into sketches in a few seconds. For example, it can doodle a house on its own or in collaboration, drawing alongside a human or incorporating text-based input to sketch each part separately.

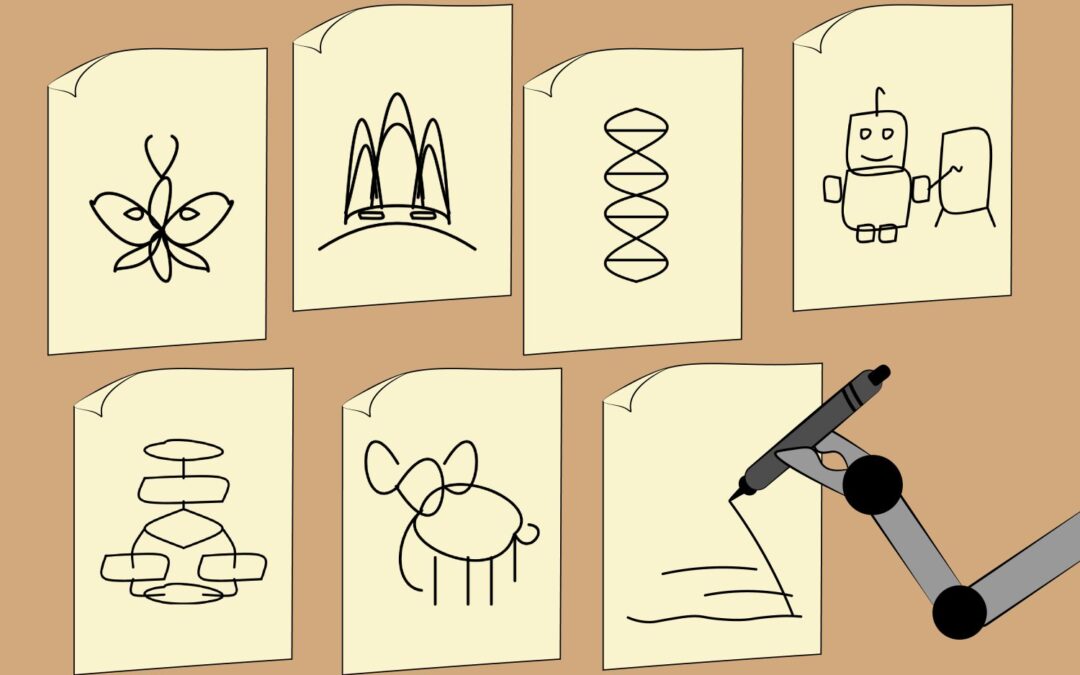

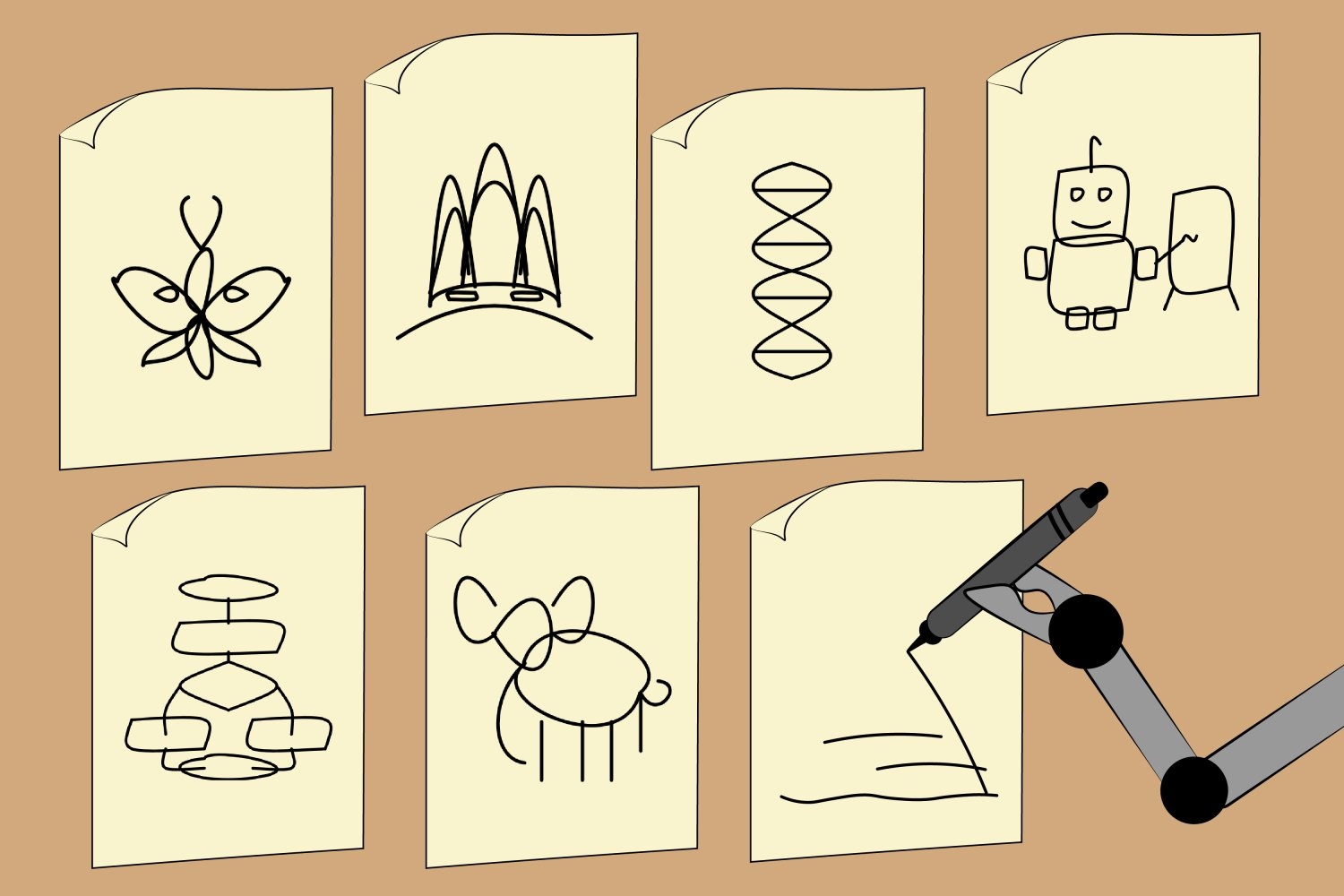

The researchers showed that SketchAgent can create abstract drawings of diverse concepts, such as a robot, a butterfly, a DNA helix, a flowchart, and even the Sydney Opera House. One day, the tool could be expanded into an interactive art game that helps teachers and researchers diagram complex concepts or gives users a quick drawing lesson.

CSAIL postdoc Yael Vinker, lead author of the paper introducing SketchAgent, noted that the system offers a more natural way for humans to communicate with AI.

“Not everyone is aware of how much they draw in their daily lives. We may sketch to work through our thoughts or to workshop ideas,” she said. “Our tool aims to emulate that process, making multimodal language models more useful in helping us express ideas visually.”

SketchAgent teaches these models to draw stroke by stroke without training on any sketch data. Instead, the researchers developed a “sketching language” in which a sketch is translated into a numbered sequence of strokes. The system is given an example of how something like a house is drawn, with each stroke labeled according to what it represents (the seventh stroke, for instance, is a rectangle labeled “front door”), which helps the model generalize to new concepts.
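To make the idea of a numbered, labeled stroke sequence concrete, here is a minimal sketch of how such an encoding might look. The `Stroke` class, the `to_sketch_language` function, the 50x50 coordinate canvas, and the text layout are all hypothetical illustrations, not SketchAgent’s actual format; the only detail taken from the article is that strokes are numbered, labeled, and that stroke 7 of the house example is the front door.

```python
from dataclasses import dataclass


@dataclass
class Stroke:
    """One stroke in a hypothetical sketch-language encoding."""
    index: int                     # position in the drawing order, starting at 1
    label: str                     # what the stroke depicts, e.g. "front door"
    points: list[tuple[int, int]]  # (x, y) points on an assumed 50x50 canvas


def to_sketch_language(strokes: list[Stroke]) -> str:
    """Serialize strokes as a numbered, labeled text listing, in drawing order."""
    lines = []
    for stroke in sorted(strokes, key=lambda s: s.index):
        coords = " ".join(f"({x},{y})" for x, y in stroke.points)
        lines.append(f"{stroke.index}. {stroke.label}: {coords}")
    return "\n".join(lines)


# A worked example in the spirit of the article: stroke 7 is the front door.
house = [
    Stroke(1, "left wall", [(10, 40), (10, 20)]),
    Stroke(2, "right wall", [(40, 40), (40, 20)]),
    Stroke(3, "floor", [(10, 40), (40, 40)]),
    Stroke(4, "left roof slope", [(10, 20), (25, 8)]),
    Stroke(5, "right roof slope", [(25, 8), (40, 20)]),
    Stroke(6, "window", [(14, 24), (20, 24), (20, 30), (14, 30), (14, 24)]),
    Stroke(7, "front door", [(24, 40), (24, 30), (30, 30), (30, 40)]),
]

print(to_sketch_language(house))
```

Because the listing is plain text, a language model can read an example like this in its prompt and emit new listings in the same shape for concepts it has never been shown drawn.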

Vinker wrote the paper with three CSAIL affiliates (postdoc Tamar Rott Shaham, undergraduate researcher Alex Zhao, and MIT Professor Antonio Torralba) as well as Stanford researcher Kristine Zheng and Assistant Professor Judith Ellen Fan. They will present their work at the 2025 Conference on Computer Vision and Pattern Recognition (CVPR) this month.

Assessing AI’s sketching abilities

While text-to-image models such as DALL-E 3 can create intriguing drawings, they lack a crucial component of sketching: the spontaneous, creative process in which each stroke can affect the overall design. SketchAgent’s drawings, on the other hand, are modeled as a sequence of strokes, so they appear more natural and fluid, like human sketches.

Prior work has also mimicked this process, but it trained models on human-drawn datasets, which are often limited in scale and diversity. SketchAgent instead uses pre-trained language models, which know a great deal about many concepts but don’t know how to sketch. Once the researchers taught language models this process, SketchAgent began to sketch diverse concepts it hadn’t been explicitly trained on.

Still, Vinker and her colleagues wanted to see whether SketchAgent was actively working with humans on the sketching process or working independently of its drawing partner. The team tested the system in a collaboration mode, in which a human and a language model work in tandem to draw a particular concept. Removing SketchAgent’s contributions revealed that the tool’s strokes were essential to the final drawing. In a drawing of a sailboat, for instance, removing the AI-drawn strokes representing the mast made the overall sketch unrecognizable.
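The removal test described above amounts to filtering a collaborative drawing by author and looking at what is left. The snippet below is a minimal, hypothetical sketch of that filtering step: the `CollabStroke` class, the `ablate` function, and the "human"/"agent" tags are illustrative assumptions, and apart from the agent-drawn mast (which comes from the article) the stroke assignments are made up. How the researchers judged whether the remaining sketch was recognizable is not covered here, so the code stops at producing the reduced drawing.

```python
from dataclasses import dataclass


@dataclass
class CollabStroke:
    index: int    # drawing order
    label: str    # what the stroke depicts
    author: str   # "human" or "agent" (hypothetical tags)


def ablate(strokes: list[CollabStroke], author: str) -> list[CollabStroke]:
    """Return the drawing with every stroke by `author` removed."""
    return [s for s in strokes if s.author != author]


# Hypothetical split of a collaborative sailboat; only the agent-drawn
# mast comes from the article, the other assignments are illustrative.
sailboat = [
    CollabStroke(1, "hull", "human"),
    CollabStroke(2, "mast", "agent"),
    CollabStroke(3, "sail", "human"),
    CollabStroke(4, "waves", "agent"),
]

human_only = ablate(sailboat, "agent")
print([s.label for s in human_only])  # ['hull', 'sail']
```

Running the same filter with `author="human"` gives the complementary view, showing what the agent alone contributed.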

In another experiment, the CSAIL and Stanford researchers plugged different multimodal language models into SketchAgent to see which could create the most recognizable sketches. Their default backbone model, Claude 3.5 Sonnet, generated the most human-like vector graphics (essentially text-based files that can be converted into high-resolution images), outperforming models such as GPT-4o and Claude 3 Opus.
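To illustrate what “text-based files that can be converted to high-resolution images” means, the sketch below turns stroke coordinates into an SVG document, a standard text-based vector format. The `strokes_to_svg` function and its parameters are hypothetical, and drawing each stroke as a straight-segment polyline is a simplification chosen for brevity; SketchAgent’s actual rendering may use curves or a different file layout.

```python
def strokes_to_svg(strokes: list[list[tuple[float, float]]], size: int = 50) -> str:
    """Render each stroke as an SVG polyline and return the SVG document as text."""
    elements = []
    for points in strokes:
        coords = " ".join(f"{x},{y}" for x, y in points)
        elements.append(
            f'  <polyline points="{coords}" fill="none" stroke="black" stroke-width="1"/>'
        )
    header = (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{size}" height="{size}" '
        f'viewBox="0 0 {size} {size}">'
    )
    return "\n".join([header, *elements, "</svg>"])


# Two strokes from a house doodle: a wall and a front door.
svg = strokes_to_svg([
    [(10, 40), (10, 20)],
    [(24, 40), (24, 30), (30, 30), (30, 40)],
])
print(svg)
```

Because the `viewBox` describes the drawing in abstract coordinates, any SVG renderer can rasterize the same text at whatever resolution is needed.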

“The fact that Claude 3.5 Sonnet outperformed other models such as GPT-4o and Claude 3 Opus suggests that this model processes and generates visually related information differently,” said co-author Tamar Rott Shaham.

She added that SketchAgent could become a helpful interface for working with AI models beyond standard, text-based communication. “As models advance in understanding and generating other modalities, such as sketches, they open up new ways for users to express ideas and receive responses that feel more intuitive and human-like,” Rott Shaham said. “This could significantly enrich interactions, making AI more accessible and versatile.”

Although SketchAgent’s drawing ability is promising, it can’t make professional sketches yet. It renders simple representations of concepts using stick figures and doodles, but struggles to draw things like logos, sentences, complex creatures such as unicorns and cows, and specific characters.

At times, the model also misunderstood users’ intentions in collaborative drawings, as when SketchAgent drew a bunny with two heads. According to Vinker, this may be because the model breaks each task down into smaller steps (so-called “chain of thought” reasoning). When working with humans, the model creates a drawing plan and may misinterpret which part of that outline the human is contributing. The researchers could potentially refine these drawing skills by training on synthetic data from diffusion models.

Additionally, SketchAgent often requires several rounds of prompting to produce human-like doodles. In the future, the team aims to make it easier to interact and sketch with multimodal language models, including by refining the interface.

Still, the tool suggests that AI could draw diverse concepts the way humans do, with step-by-step human-AI collaboration producing more aligned final designs.

The work was supported by a Hoffman-Yee grant from the Stanford Institute for Human-Centered AI, Hyundai Motor Company, the U.S. Army Research Laboratory, the Zuckerman STEM Leadership Program, and a Viterbi Fellowship.

1005 Alcyon Dr Bellmawr NJ 08031