AI agents are becoming crucial in helping engineers handle complex coding tasks efficiently. A major challenge, however, is accurately evaluating whether these agents can handle real-world coding scenarios beyond simplified benchmarks.

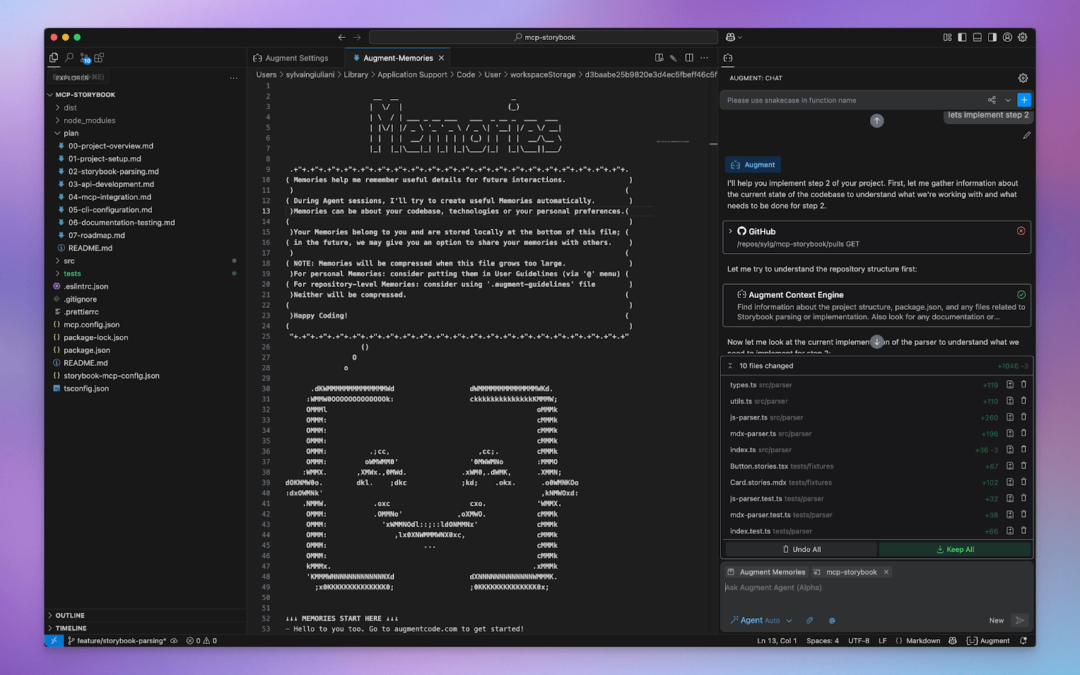

Augment Code has announced the release of its Augment SWE-bench Verified Agent, a development in agentic AI tailored specifically for software engineering. This release places it at the top of open-source agent performance on the SWE-bench leaderboard. By combining the strengths of Anthropic’s Claude Sonnet 3.7 and OpenAI’s o1 model, Augment Code’s approach has delivered impressive results, showcasing a compelling blend of innovation and pragmatic system architecture.

The SWE-bench benchmark is a rigorous test that measures how effectively AI agents handle practical software engineering tasks drawn directly from GitHub issues in popular open-source repositories. Unlike traditional coding benchmarks, which usually focus on isolated algorithmic problems, SWE-bench offers a more realistic testbed that requires agents to navigate existing codebases, identify relevant tests autonomously, create scripts, and iterate against comprehensive regression test suites.
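The pass criterion behind a test-driven benchmark of this kind can be sketched in a few lines. The sketch below assumes the convention SWE-bench documents for its tasks, where some tests must flip from failing to passing and existing regression tests must keep passing. The class and function names (`InstanceResult`, `is_resolved`, `resolve_rate`) are hypothetical and chosen for illustration; this is not the official evaluation harness.

```python
from dataclasses import dataclass


@dataclass
class InstanceResult:
    """Test outcomes for one benchmark task after applying the agent's patch."""
    instance_id: str
    fail_to_pass: dict[str, bool]  # tests the fix is supposed to make pass
    pass_to_pass: dict[str, bool]  # regression tests that must keep passing


def is_resolved(result: InstanceResult) -> bool:
    """A task counts as resolved only if every target and regression test passes."""
    return all(result.fail_to_pass.values()) and all(result.pass_to_pass.values())


def resolve_rate(results: list[InstanceResult]) -> float:
    """Fraction of tasks resolved across a run (0.0 for an empty run)."""
    if not results:
        return 0.0
    return sum(is_resolved(r) for r in results) / len(results)
```

A patch that fixes the reported issue but breaks a single regression test is scored as unresolved, which is why agents have to iterate against the full test suite rather than only the failing case.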

Augment Code’s initial submission achieved a 65.4% success rate, a notable result in this demanding environment. The company focused its first effort on leveraging existing state-of-the-art models, specifically Anthropic’s Claude Sonnet 3.7 as the primary driver of task execution and OpenAI’s o1 model for ensembling. This approach deliberately bypassed training proprietary models at this initial stage, establishing a strong baseline.

One interesting aspect of Augment’s methodology is its exploration of different agent behaviors and strategies. For example, the team found that certain techniques expected to help, such as Claude Sonnet’s “thinking mode” and a separate regression-fixing agent, did not yield meaningful performance improvements. This highlights the nuanced and sometimes counterintuitive dynamics of agent performance optimization. Basic ensembling techniques such as majority voting were also explored but ultimately abandoned because of cost and efficiency considerations. However, simple ensembling with OpenAI’s o1 did provide incremental improvements in accuracy, underscoring the value of ensembling even in constrained scenarios.
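The voting-based ensembling mentioned above can be illustrated with a minimal sketch: generate several candidate patches, then keep the one that appears most often. The helper names (`normalize_patch`, `majority_vote`) and the whitespace-only normalization are assumptions made for this example. It is not Augment Code’s implementation, which according to the article relies on o1 rather than on plain voting.

```python
from collections import Counter


def normalize_patch(patch: str) -> str:
    """Drop trailing whitespace and blank lines so trivially different diffs compare equal."""
    lines = [line.rstrip() for line in patch.strip().splitlines()]
    return "\n".join(line for line in lines if line)


def majority_vote(candidates: list[str]) -> str:
    """Return the candidate patch whose normalized form occurs most often.

    Ties are broken in favor of the earliest candidate in the list.
    """
    if not candidates:
        raise ValueError("need at least one candidate patch")
    counts = Counter(normalize_patch(c) for c in candidates)
    # max() returns the first maximal element, which gives the tie-break above.
    return max(candidates, key=lambda c: counts[normalize_patch(c)])
```

Each candidate requires a full agent run, so the cost of this scheme grows linearly with the number of votes. That is consistent with the cost and efficiency concerns cited as the reason for abandoning it.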

While the success of Augment Code’s initial SWE-bench submission is commendable, the company is transparent about the benchmark’s limitations. Notably, SWE-bench problems are heavily skewed toward bug fixing rather than feature creation, the provided issue descriptions are comparatively structured and agent-friendly, and the benchmark uses only Python. Real-world complexities, such as navigating massive production codebases and working with less descriptive programming languages, pose challenges that SWE-bench does not capture.

Augment Code has openly acknowledged these limitations, emphasizing its continued commitment to optimizing agent performance beyond benchmark metrics. The company stresses that while improvements to prompting and ensembling can boost quantitative results, qualitative customer feedback and real-world usability remain its priorities. Augment Code’s ultimate goal is to develop cost-effective, fast agents capable of providing unparalleled coding assistance in practical professional environments.

As part of its future roadmap, Augment is actively exploring fine-tuning proprietary models using reinforcement learning (RL) techniques and proprietary data. These advances are expected to improve model accuracy and significantly reduce latency and operational costs, making AI-driven coding assistance more accessible and scalable.

Some key takeaways from the Augment SWE-bench Verified Agent include:

- Augment Code released the Augment SWE-bench Verified Agent, which achieved the top spot among open-source agents.

- The agent combines Anthropic’s Claude Sonnet 3.7 as its core driver with OpenAI’s o1 model for ensembling.

- It achieved a 65.4% success rate on SWE-bench, highlighting robust baseline capabilities.

- Counterintuitive results emerged: features expected to help, such as “thinking mode” and a separate regression-fixing agent, offered no substantial performance gains.

- Cost-effectiveness was identified as a critical barrier to implementing extensive ensembling in real-world scenarios.

- The company acknowledged the benchmark’s limitations, including its bias toward Python and smaller-scale bug-fixing tasks.

- Future improvements will focus on reducing cost, lowering latency, and improving usability through reinforcement learning and fine-tuning proprietary models.

- The company emphasized the importance of balancing benchmark-driven improvements with qualitative, user-centric enhancements.

Check out the GitHub page. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform that stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among readers.

1005 Alcyon Dr Bellmawr NJ 08031
